Inflearn Community Q&A

Lecture 4.3: Bad Request error

Resolved question


3 answers

0

Here is the llm.py code as well.

import streamlit as st
from dotenv import load_dotenv  # load environment variables
from langchain_upstage import ChatUpstage
from langchain_upstage import UpstageEmbeddings  # embeddings for the vector space
from langchain_pinecone import PineconeVectorStore  # Pinecone vector database
from pinecone import Pinecone, ServerlessSpec
from langchain.chains import RetrievalQA  # passes retrieved context to the LLM to generate an answer
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain.chains import create_history_aware_retriever
from langchain_core.prompts import MessagesPlaceholder
from langchain.chains import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_community.chat_message_histories import ChatMessageHistory
from langchain_core.chat_history import BaseChatMessageHistory
from langchain_core.runnables.history import RunnableWithMessageHistory

store = {}

def get_session_history(session_id: str) -> BaseChatMessageHistory:
    if session_id not in store:
        store[session_id] = ChatMessageHistory()
    return store[session_id]

def get_retriever():
    # Vectorize chunks with the embedding model (Upstage here, not OpenAI)
    embedding = UpstageEmbeddings(model='solar-embedding-1-large')
    index_name = "tax-markdown"
    # Use an index that has already been created
    database = PineconeVectorStore.from_existing_index(index_name=index_name, embedding=embedding)
    #retriever = database.similarity_search(query, k=3)
    retriever = database.as_retriever(search_kwargs={'k':4})
    return retriever

def get_llm(model='solar-embedding-1-large'):
    llm = ChatUpstage(model=model)
    return llm

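def get_dictionary_chain():
    # Dictionary entry: "expressions referring to a person -> 거주자 (resident)"
    dictionary = ["사람을 나타내는 표현 -> 거주자"]
    llm = get_llm()
    # Prompt (kept in Korean): rewrite the user's question using the dictionary;
    # if no change is needed, return the question unchanged.
    prompt = ChatPromptTemplate.from_template(f"""
        사용자의 질문을 보고, 우리의 사전을 참고해서 사용자의 질문을 변경해주세요.
        만약 변경할 필요가 없다고 판단된다면, 사용자의 질문을 변경하지 않아도 됩니다.
        그런 경우에는 질문만 리턴해주세요
        사전: {dictionary}

        질문: {{question}}
    """)
    dictionary_chain = prompt | llm | StrOutputParser()
    return dictionary_chain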

def get_rag_chain():
    llm=get_llm()
    retriever=get_retriever()

    contextualize_q_system_prompt = (
        "Given a chat history and the latest user question "
        "which might reference context in the chat history, "
        "formulate a standalone question which can be understood "
        "without the chat history. Do NOT answer the question, "
        "just reformulate it if needed and otherwise return it as is."
    )
    contextualize_q_prompt = ChatPromptTemplate.from_messages(
        [
            ("system", contextualize_q_system_prompt),
            MessagesPlaceholder("chat_history"),
            ("human", "{input}"),
        ]
    )
    history_aware_retriever = create_history_aware_retriever(
        llm, retriever, contextualize_q_prompt
    )

    system_prompt = (
        "You are an assistant for question-answering tasks. "
        "Use the following pieces of retrieved context to answer "
        "the question. If you don't know the answer, say that you "
        "don't know. Use three sentences maximum and keep the "
        "answer concise."
        "\n\n"
        "{context}"
    )
    qa_prompt = ChatPromptTemplate.from_messages(
        [
            ("system", system_prompt),
            MessagesPlaceholder("chat_history"),
            ("human", "{input}"),
        ]
    )
    question_answer_chain = create_stuff_documents_chain(llm, qa_prompt)
    rag_chain = create_retrieval_chain(history_aware_retriever, question_answer_chain)

    conversational_rag_chain = RunnableWithMessageHistory(
        rag_chain,
        get_session_history,
        input_messages_key="input",
        history_messages_key="chat_history",
        output_messages_key="answer",
    ).pick('answer')
    return conversational_rag_chain

def get_ai_response(user_message):
    dictionary_chain = get_dictionary_chain()
    rag_chain = get_rag_chain()
    tax_chain = {"input": dictionary_chain} | rag_chain
    ai_response = tax_chain.stream(
        {
            "question": user_message
        },
        config={
            "configurable": {"session_id": "abc123"}
        },
    )
    return ai_response
    #return ai_message["answer"]

def get_llm(model='solar-embedding-1-large'):
    llm = ChatUpstage(model=model)

The official documentation's guidance is to not pass a model here. If you do specify one, it has to be one of the models listed at the link below.

https://developers.upstage.ai/docs/getting-started/models#solar-llm

Also, you need to pass model_name rather than model! I'll share the source code as well.
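For reference, a minimal sketch of what that advice could look like in llm.py. The helper name build_chat_kwargs is hypothetical (it is not part of langchain_upstage), and passing the name as model_name simply follows the answer above; treat this as an illustration rather than the course's actual code.

```python
from typing import Any, Dict, Optional


def build_chat_kwargs(model_name: Optional[str] = None) -> Dict[str, Any]:
    """Keyword arguments for the chat model constructor (hypothetical helper).

    With no model given, nothing is passed, so the library default applies.
    """
    if model_name is None:
        return {}
    # Per the answer above, the name is passed as model_name rather than model.
    return {"model_name": model_name}


def get_llm(model_name: Optional[str] = None):
    # Imported lazily so build_chat_kwargs can be tried without the package installed.
    from langchain_upstage import ChatUpstage

    return ChatUpstage(**build_chat_kwargs(model_name))
```

Calling get_llm() with no argument then leaves the model choice to the library, and any explicit name should come from the model list linked above.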

0

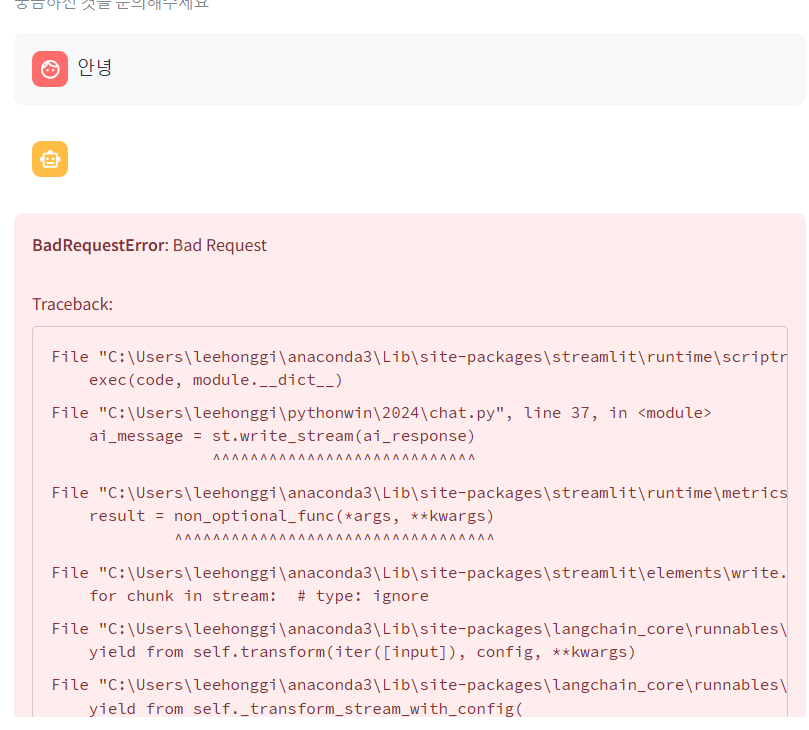

BadRequestError: Bad Request

Traceback:
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\streamlit\runtime\scriptrunner\script_runner.py", line 542, in _run_script
    exec(code, module.__dict__)
  File "C:\Users\leehonggi\pythonwin\2024\chat.py", line 37, in <module>
    ai_message = st.write_stream(ai_response)
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\streamlit\runtime\metrics_util.py", line 397, in wrapped_func
    result = non_optional_func(*args, **kwargs)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\streamlit\elements\write.py", line 159, in write_stream
    for chunk in stream:  # type: ignore
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3262, in stream
    yield from self.transform(iter([input]), config, **kwargs)
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3249, in transform
    yield from self._transform_stream_with_config(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 2054, in _transform_stream_with_config
    chunk: Output = context.run(next, iterator)  # type: ignore
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3211, in _transform
    for output in final_pipeline:
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\passthrough.py", line 765, in transform
    yield from self._transform_stream_with_config(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 2018, in _transform_stream_with_config
    final_input: Optional[Input] = next(input_for_tracing, None)
                                   ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 5301, in transform
    yield from self.bound.transform(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 5301, in transform
    yield from self.bound.transform(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3249, in transform
    yield from self._transform_stream_with_config(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 2018, in _transform_stream_with_config
    final_input: Optional[Input] = next(input_for_tracing, None)
                                   ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3700, in transform
    yield from self._transform_stream_with_config(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 2054, in _transform_stream_with_config
    chunk: Output = context.run(next, iterator)  # type: ignore
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3685, in _transform
    chunk = AddableDict({step_name: future.result()})
                                    ^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\concurrent\futures\_base.py", line 449, in result
    return self.__get_result()
           ^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\concurrent\futures\_base.py", line 401, in __get_result
    raise self._exception
  File "C:\Users\leehonggi\anaconda3\Lib\concurrent\futures\thread.py", line 58, in run
    result = self.fn(*self.args, **self.kwargs)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3249, in transform
    yield from self._transform_stream_with_config(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 2054, in _transform_stream_with_config
    chunk: Output = context.run(next, iterator)  # type: ignore
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 3211, in _transform
    for output in final_pipeline:
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\output_parsers\transform.py", line 65, in transform
    yield from self._transform_stream_with_config(
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 2018, in _transform_stream_with_config
    final_input: Optional[Input] = next(input_for_tracing, None)
                                   ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\runnables\base.py", line 1290, in transform
    yield from self.stream(final, config, **kwargs)
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\language_models\chat_models.py", line 425, in stream
    raise e
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_core\language_models\chat_models.py", line 405, in stream
    for chunk in self._stream(messages, stop=stop, **kwargs):
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\langchain_openai\chat_models\base.py", line 558, in _stream
    response = self.client.create(**payload)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\openai\_utils\_utils.py", line 274, in wrapper
    return func(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\openai\resources\chat\completions.py", line 668, in create
    return self._post(
           ^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\openai\_base_client.py", line 1259, in post
    return cast(ResponseT, self.request(cast_to, opts, stream=stream, stream_cls=stream_cls))
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\openai\_base_client.py", line 936, in request
    return self._request(
           ^^^^^^^^^^^^^^
  File "C:\Users\leehonggi\anaconda3\Lib\site-packages\openai\_base_client.py", line 1040, in _request
    raise self._make_status_error_from_response(err.response) from None

0

In get_llm(), you need to use a chat model, not an embedding model!

https://developers.upstage.ai/docs/getting-started/quick-start#make-an-api-request
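One way to catch this kind of mix-up before any request is sent is a small guard on the model name. The sketch below is only an illustration: check_chat_model is a hypothetical helper, and the rule "a name containing 'embedding' is an embedding model" is an assumption about naming, not something the Upstage API guarantees.

```python
from typing import Optional


def check_chat_model(model_name: Optional[str]) -> Optional[str]:
    """Reject names that look like embedding models (hypothetical naming heuristic)."""
    if model_name is not None and "embedding" in model_name.lower():
        raise ValueError(
            f"{model_name!r} looks like an embedding model; "
            "get_llm() needs a chat model."
        )
    return model_name


# The default used in the posted llm.py is rejected by the guard.
try:
    check_chat_model("solar-embedding-1-large")
except ValueError as err:
    print(err)
```

In llm.py the guard could run at the top of get_llm(), so the mistake shows up as a readable ValueError instead of a Bad Request from the API.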