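Mastering Machine Learning Thoroughly from the Basics

Coco

$85,800.00

Basic / Machine Learning

4.8 (26)

Theory and practice are different. This course covers the basic concepts of machine learning and introduces the core concepts and theory of the essential models. Then, by working with a variety of data, it shares techniques and know-how that help in practice.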

Learn MLP, CNN, and RNN, the basic frameworks of deep learning, quickly and easily.

- PyTorch basic techniques
- Knowledge of neural networks
- Basic knowledge of deep learning
- Concepts of CNN and RNN
- How to design a deep learning model using PyTorch
- Building a CIFAR-10 image classification model using a CNN (ResNet)
- Building a movie review prediction model using an RNN
- Transfer learning / autoencoders / deep learning paper reviews
- Model generalization

🙆🏻‍♀️ Learn quickly and easily, from the MLP, the basic framework of deep learning, through CNN and RNN. 🙆🏻‍♂️

I compiled the lectures from this Inflearn course into a book titled 'Python Deep Learning PyTorch'.

Thank you for your interest : )

(The Inflearn lectures were updated on 2020.10.06, and we will keep updating them.)

http://m.yes24.com/Goods/Detail/93376077?ozsrank=10

http://mbook.interpark.com/shop/product/detail?prdNo=339742291&is1=book&is2=product

- Added a PyTorch background lecture (about 1 hour) for those who are not familiar with PyTorch.

- Added a 1-hour lecture on paper review.

Most of the artificial intelligence we talk about these days uses deep learning models. Neural networks, the basis of deep learning, are not new algorithms: they have existed for a long time but saw little use because they were difficult to train. Starting from this basic neural network, we will learn why deep learning became popular and what its characteristics are, and then move on to convolutional neural networks and recurrent neural networks, the basic deep learning models.

Learn about the perceptron, often called the first artificial intelligence, its limitations, and the MLP that overcomes them.
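To make the perceptron's limitation concrete, here is a minimal pure-Python sketch (an illustration, not the course's code; the function names and the AND/XOR toy datasets are chosen for this example). It trains a single perceptron with the classic update rule: the AND gate is linearly separable and is learned perfectly, while XOR can never be fit by a single straight line, which is exactly the gap the MLP closes.

```python
def step(z):
    # Heaviside step activation used by the classic perceptron
    return 1 if z >= 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    # Perceptron update rule: w <- w + lr * (target - prediction) * x
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - step(w[0] * x1 + w[1] * x2 + b)
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


def accuracy(w, b, samples):
    hits = sum(step(w[0] * x1 + w[1] * x2 + b) == t for (x1, x2), t in samples)
    return hits / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_and, b_and = train_perceptron(AND)
w_xor, b_xor = train_perceptron(XOR)
print("AND accuracy:", accuracy(w_and, b_and, AND))  # AND accuracy: 1.0
print("XOR accuracy:", accuracy(w_xor, b_xor, XOR))  # always below 1.0: no line separates XOR
```

No number of extra epochs fixes XOR here, because a single perceptron can only draw one linear decision boundary.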

You can think of the MLP as the basic structure of a neural network. I will explain its learning algorithm step by step.

We discuss feed-forward and backpropagation, along with their advantages and disadvantages.
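As a sketch of the feed-forward and backpropagation steps, the code below trains a tiny MLP (2 inputs, 4 sigmoid hidden units, 1 sigmoid output) on XOR with full-batch gradient descent. It is written in plain Python rather than PyTorch so every step is visible; the layer sizes, squared-error loss, learning rate, epoch count, and seed are arbitrary assumptions for illustration, not values from the course.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in=2, n_hidden=4, seed=0):
    # Small random weights; the seed is only for reproducibility
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    # Feed-forward pass: input -> sigmoid hidden layer -> sigmoid output
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y


def loss_and_grads(p, data):
    # Mean of 0.5 * (y - t)^2 over the batch, with gradients from backpropagation
    n_hidden, n_in = len(p["W1"]), len(p["W1"][0])
    g = {"W1": [[0.0] * n_in for _ in range(n_hidden)], "b1": [0.0] * n_hidden,
         "W2": [0.0] * n_hidden, "b2": 0.0}
    loss = 0.0
    for x, t in data:
        h, y = forward(p, x)
        loss += 0.5 * (y - t) ** 2
        d_out = (y - t) * y * (1 - y)  # dLoss / d(output pre-activation)
        g["b2"] += d_out
        for j in range(n_hidden):
            g["W2"][j] += d_out * h[j]
            # error propagated back through W2 and the hidden sigmoid
            d_hid = d_out * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += d_hid
            for i in range(n_in):
                g["W1"][j][i] += d_hid * x[i]
    n = len(data)
    g["W1"] = [[v / n for v in row] for row in g["W1"]]
    g["b1"] = [v / n for v in g["b1"]]
    g["W2"] = [v / n for v in g["W2"]]
    g["b2"] /= n
    return loss / n, g


def train(data, epochs=2000, lr=0.5, seed=0):
    # Full-batch gradient descent: feed forward, backpropagate, update
    p = init_params(seed=seed)
    history = []
    for _ in range(epochs):
        loss, g = loss_and_grads(p, data)
        history.append(loss)
        p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)] for row, grow in zip(p["W1"], g["W1"])]
        p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
        p["W2"] = [w - lr * gw for w, gw in zip(p["W2"], g["W2"])]
        p["b2"] -= lr * g["b2"]
    return p, history


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
params, history = train(XOR)
print("loss: first epoch %.4f, last epoch %.4f" % (history[0], history[-1]))
```

A quick sanity check for a hand-written backward pass like this is to compare a gradient entry against a finite-difference estimate; PyTorch's autograd computes these gradients for you.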

We take an in-depth look at the definition of deep learning and how it differs from conventional neural networks.

We cover activation functions, dropout, and batch normalization, which can alleviate the vanishing-gradient and overfitting problems that are shortcomings of neural networks (NNs).
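A minimal plain-Python sketch of these three tools, under simplifying assumptions: gradients are chained through 10 layers ignoring the weight terms, dropout is the common "inverted" variant, and batch normalization is shown for one feature over one mini-batch in training mode (the running statistics used at inference are omitted). The function names are chosen for this example.

```python
import math
import random


def sigmoid_grad(z):
    # Derivative of the sigmoid; its maximum is 0.25 (at z = 0)
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_grad(z):
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise
    return 1.0 if z > 0 else 0.0


def dropout(xs, p, training, rng):
    # Inverted dropout: in training, drop each unit with probability p and scale
    # survivors by 1 / (1 - p) so the expected activation is unchanged;
    # at inference time the input passes through untouched
    if not training or p == 0.0:
        return list(xs)
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in xs]


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    # Normalize one feature over a mini-batch to zero mean and unit variance,
    # then apply the learnable scale (gamma) and shift (beta)
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


# Chain rule through 10 layers: sigmoid shrinks the gradient, ReLU (on active units) does not
print("sigmoid, 10 layers:", sigmoid_grad(0.0) ** 10)  # about 9.5e-07 even in the best case
print("ReLU, 10 layers:", relu_grad(1.0) ** 10)
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, training=True, rng=random.Random(0)))
print(batch_norm([1.0, 2.0, 3.0, 4.0]))
```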

We also discuss autoencoders, which go beyond classification and can learn new features.

🌈 Convolutional Neural Network (CNN)

Looking at the history of deep learning, I think the CNN is the model that has advanced the most. We cover the CNN, which started with image classification and has since made tremendous progress, and discuss the characteristics of its learning algorithm and how it differs from a general NN.
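To show what a convolutional layer does differently from a fully connected one, here is a sketch of a single-channel 2-D convolution and max pooling in plain Python. The kernel is hand-picked as a vertical-edge detector purely for illustration (an assumption; in a CNN its weights would be learned), and the same small kernel is shared across every position of the image.

```python
def conv2d(image, kernel, stride=1):
    # "Valid" 2-D convolution as used in CNN layers (strictly, cross-correlation):
    # one small kernel (shared weights) slides over every position of the image
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i * stride + u][j * stride + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def max_pool2d(fmap, size=2):
    # Non-overlapping max pooling: keep the strongest response in each size x size window
    return [
        [max(fmap[i + u][j + v] for u in range(size) for v in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# A 4x4 image that is dark on the left and bright on the right
image = [[0, 0, 1, 1] for _ in range(4)]
vertical_edge = [[-1, 1], [-1, 1]]  # hand-picked; a CNN would learn these weights
fmap = conv2d(image, vertical_edge)
print(fmap)              # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
print(max_pool2d(fmap))  # [[2.0]]
```

The kernel responds only where the brightness changes, wherever that edge appears in the image, which is the property that makes convolution well suited to images.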

We also discuss various architectures (ResNet, DenseNet), initialization, optimizer techniques, and transfer learning for improving CNN performance.

We cover RNN and LSTM, the basic models for text (language models).
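A minimal sketch of the recurrence at the heart of a vanilla RNN, in plain Python with a hypothetical one-dimensional hidden state and hand-picked weights. An LSTM adds input, forget, and output gates around this update to keep information over longer sequences; that part is not shown here.

```python
import math


def rnn_forward(inputs, W_xh, W_hh, b_h, h0):
    # Vanilla RNN step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
    # The same weights are reused at every time step; h carries information forward.
    h = list(h0)
    states = []
    for x in inputs:
        h = [
            math.tanh(
                sum(W_xh[k][i] * x[i] for i in range(len(x)))
                + sum(W_hh[k][j] * h[j] for j in range(len(h)))
                + b_h[k]
            )
            for k in range(len(h))
        ]
        states.append(h)
    return states


# Toy sequence: a signal at t = 0, then zeros
states = rnn_forward([[1.0], [0.0], [0.0]], W_xh=[[1.0]], W_hh=[[0.5]], b_h=[0.0], h0=[0.0])
print([round(s[0], 4) for s in states])  # the state decays but still remembers the first input
```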

We discuss the various fields in which deep learning is used.

Generalization is a very big issue across AI in general, including deep learning and machine learning. It is a difficult problem that has not yet been solved, so many studies are trying to address it. We also introduce several of these studies on generalization.

Generalization performance has traditionally been measured as the difference between the training error and the estimated (test) error, on the view that the closer a model's performance on training data is to its performance on test data, the better. However, a published study challenges this conventional wisdom: its authors claim that deep learning does not learn but 'memorizes.'
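The traditional measure is simple arithmetic, sketched below with made-up error rates. The `shuffle_labels` helper is an added assumption: training on randomly permuted labels is one common way to probe memorization (a model that reaches near-zero training error on such labels can only be memorizing), but the text does not specify which experiment the reviewed paper uses.

```python
import random


def generalization_gap(train_error, test_error):
    # Difference between the estimated (test) error and the training error;
    # traditionally, a smaller gap was read as better generalization
    return test_error - train_error


def shuffle_labels(labels, seed=0):
    # Randomly permute the labels so they no longer carry information about the inputs
    shuffled = list(labels)
    random.Random(seed).shuffle(shuffled)
    return shuffled


# Hypothetical error rates, for illustration only
print(generalization_gap(train_error=0.02, test_error=0.10))
print(shuffle_labels([0, 1, 2, 3, 4, 5, 6, 7, 8, 9]))
```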

It is well known that CNNs are very effective models for image classification, because they capture image features well through representation learning. However, one study found that CNNs learn the texture of an image rather than its shape. This paper was accepted as an oral presentation at ICLR, a top AI conference, with nothing but experimental results and not a single equation, and it is regarded as having very high academic value.

We introduce a paper on how humans and deep learning differ in generalization, and on how deep learning can generalize more like humans.

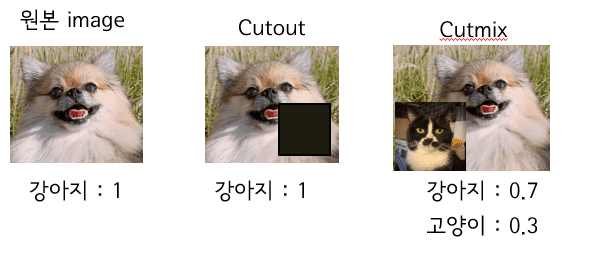

The most basic way to improve image classification performance and generalization is data augmentation. We introduce Cutout and CutMix, which extend it into more effective augmentation methods. We also introduce knowledge distillation, in which a teacher model passes its knowledge on to a student model.
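A sketch of Cutout and CutMix on single-channel images stored as nested lists. It follows the commonly described recipes (Cutout zeros a square around a random centre; CutMix pastes a box whose area is set by λ ~ Beta(α, α) and mixes the labels by the area actually pasted), but the function names, the 8x8 toy images, and α = 1.0 are assumptions for illustration, not the course's code.

```python
import math
import random


def cutout(image, length, rng):
    # Cutout: zero a length x length square centred on a random pixel
    # (the square is clipped where it runs past the border)
    h, w = len(image), len(image[0])
    cy, cx = rng.randrange(h), rng.randrange(w)
    y1, y2 = max(cy - length // 2, 0), min(cy + length // 2, h)
    x1, x2 = max(cx - length // 2, 0), min(cx + length // 2, w)
    out = [row[:] for row in image]
    for y in range(y1, y2):
        for x in range(x1, x2):
            out[y][x] = 0.0
    return out


def cutmix(img_a, label_a, img_b, label_b, alpha, rng):
    # CutMix: paste a random box from img_b into img_a and mix the one-hot labels
    # in proportion to the pixel area each image contributes
    h, w = len(img_a), len(img_a[0])
    lam = rng.betavariate(alpha, alpha)
    cut_h, cut_w = int(h * math.sqrt(1 - lam)), int(w * math.sqrt(1 - lam))
    cy, cx = rng.randrange(h), rng.randrange(w)
    y1, y2 = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    x1, x2 = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    mixed = [row[:] for row in img_a]
    for y in range(y1, y2):
        for x in range(x1, x2):
            mixed[y][x] = img_b[y][x]
    lam = 1 - (y2 - y1) * (x2 - x1) / (h * w)  # actual share of img_a after clipping
    label = [lam * a + (1 - lam) * b for a, b in zip(label_a, label_b)]
    return mixed, label


rng = random.Random(0)
white = [[1.0] * 8 for _ in range(8)]
black = [[0.0] * 8 for _ in range(8)]
print(sum(map(sum, cutout(white, 4, rng))))  # 64 minus the number of erased pixels
mixed, label = cutmix(white, [1.0, 0.0], black, [0.0, 1.0], alpha=1.0, rng=rng)
print(label)  # [share of white pixels, share of black pixels]
```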

Is it right to train an image of a 'young girl' with the label Girl, or with the label Woman? Wouldn't it help deep learning generalize better if the image were trained as roughly half Girl and half Woman? This approach is called label softening, and it can also be achieved through knowledge distillation. I will introduce a study on regularization using these methods.
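The sketch below shows two ways of producing "softened" labels, assuming uniform label smoothing as the hand-crafted variant and a Hinton-style distillation loss (temperature-scaled softmax with T² weighting) as the teacher-based variant; the study introduced in the lecture may use different formulations. Class 0 stands for Girl and class 1 for Woman, as in the example above, and all logits are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    # A temperature above 1 flattens the distribution ("softer" probabilities)
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def smooth_labels(true_class, num_classes, epsilon=0.1):
    # Label smoothing: keep 1 - epsilon on the true class and spread epsilon uniformly
    share = epsilon / num_classes
    return [1.0 - epsilon + share if k == true_class else share for k in range(num_classes)]


def cross_entropy(target, probs):
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)


def distillation_loss(student_logits, teacher_logits, true_class, temperature=4.0, alpha=0.5):
    # Weighted sum of cross-entropy on the hard label and cross-entropy against the
    # teacher's softened probabilities (equal to the KL term up to a constant);
    # the T^2 factor keeps the soft term's gradients on a comparable scale
    n = len(student_logits)
    hard = [1.0 if k == true_class else 0.0 for k in range(n)]
    hard_term = cross_entropy(hard, softmax(student_logits))
    soft_targets = softmax(teacher_logits, temperature)
    soft_term = cross_entropy(soft_targets, softmax(student_logits, temperature))
    return alpha * hard_term + (1 - alpha) * soft_term * temperature ** 2


print(smooth_labels(0, 2, epsilon=0.1))  # mostly Girl, a little Woman
print(smooth_labels(0, 2, epsilon=1.0))  # half Girl, half Woman
print(softmax([3.0, 1.0], temperature=1.0), softmax([3.0, 1.0], temperature=4.0))
print(distillation_loss([2.0, 0.5], [3.0, 1.0], true_class=0))
```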

We review some easy-to-read, high-quality papers worth reading!

Paper reviews will be updated continuously!

If you add a little noise to a picture of an elephant, it still looks like an elephant to the human eye, but a deep learning model may predict it as a koala. As the field of fooling deep learning models with noise has emerged, many related studies have appeared, and I would like to introduce a study showing that adding a single noise pattern can make a deep learning model misclassify almost all images.
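Why can one small, fixed perturbation fool a model on many inputs at once? The toy sketch below uses a linear classifier with hypothetical weights and inputs (an assumption: this is not the method of the study described, which deals with deep networks). For a linear score w·x + b, adding δ = -ε·sign(w) shifts every input's score by -ε‖w‖₁, so in high dimensions a per-pixel change of only ε can flip every prediction whose margin is smaller than that shift.

```python
def sign(v):
    return (v > 0) - (v < 0)


def score(w, b, x):
    # Linear classifier: predict class 1 if the score is positive
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def linear_universal_perturbation(w, epsilon):
    # For a linear score w.x + b, the single perturbation delta = -epsilon * sign(w)
    # lowers EVERY input's score by epsilon * ||w||_1, whatever the input is
    return [-epsilon * sign(wi) for wi in w]


dim = 100
w = [0.1 if i % 2 == 0 else -0.1 for i in range(dim)]  # hypothetical trained weights
b = 0.0
# Hypothetical inputs, each classified as class 1 with a small margin (score about 0.2)
inputs = [[base + 0.02 * sign(wi) for wi in w] for base in (0.2, 0.5, 0.8)]
delta = linear_universal_perturbation(w, epsilon=0.05)  # each pixel moves by only 0.05
before = [score(w, b, x) > 0 for x in inputs]
after = [score(w, b, [xi + di for xi, di in zip(x, delta)]) > 0 for x in inputs]
print(before, after)  # [True, True, True] [False, False, False]
```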

It is commonly held that a deep learning model should be simple for simple problems and complex for complex problems. On this topic, there is a paper that studies the relationship between model size, the number of training epochs, and overfitting in deep learning.

In the figure below, all four pictures are 'dogs', but each has a different style. A CNN that learns dogs in one domain often fails to recognize dogs in other domains, because it assumes that the training data and test data come from the same distribution. Generalizing across multiple domains is called domain generalization, and we introduce research on it.

Q. Is a lot of mathematical knowledge required?

A. Some is needed for the MLP section, but you can still follow the rest of the course without it.

Q. Do I need to know Python?

A. Yes, the course assumes some knowledge of Python. We also spend about an hour on PyTorch techniques for deep learning.

Q. What kind of papers do you review?

A. I mainly review papers that are easy to read and have made a major contribution to deep learning. I will add a new review video whenever I find a good paper.

- Master's program in Industrial Engineering, Yonsei University

- Data science / deep learning research

- https://github.com/Justin-A

Who is this course right for?

If you want to learn deep learning

If you want to learn the basics of Neural Network thoroughly

If you want to know the basic theory of deep learning and the areas it is developing

Need to know before starting?

Basic knowledge of Python

Basic knowledge of machine learning

8,243 students ∙ 496 reviews ∙ 136 answers ∙ 4.4 rating ∙ 20 courses

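I majored in statistics as an undergraduate, earned a PhD in industrial engineering (artificial intelligence), and am currently unemployed and still studying.

Awards

ㆍ 6th Big Contest, Game User Churn Algorithm Development / NCSOFT Award (2018)

ㆍ 5th Big Contest, Loan Delinquency Prediction Algorithm Development / Korea Association for ICT Promotion Chairman's Award (2017)

ㆍ 2016 Weather Big Data Contest / Korea Meteorological Industry Promotion Agency President's Award (2016)

ㆍ 4th Big Contest, Insurance Fraud Prediction Algorithm Development / Finalist (2016)

ㆍ 3rd Big Contest, Baseball Game Prediction Algorithm Development / Minister of Science, ICT and Future Planning Award (2015)

* blog : https://bluediary8.tistory.com

My main research areas are data science, reinforcement learning, and deep learning.

Crawling and text mining are hobbies for me now :)

Using crawling, I developed an app called Marong that collects and shows only popular community posts,

and I also built a restaurant recommendation app by collecting restaurant lists and blog posts from across the country :) (it flopped spectacularly..)

I am now a PhD student researching artificial intelligence.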


43 lectures ∙ (11hr 41min)


19 reviews

4.2



Reviews 1 ∙ Average Rating 4.0

4

Although the course is described as 'easy and fast', if you look closely at the lecture content, it is not easy. This is not a lecture that just skims over deep learning; it shows clear traces of the effort to present the material as thoroughly as possible. I would like to thank Coco for her efforts in preparing it.

