Inflearn Community Q&A

Problem after a forced shutdown of a cluster installed from course material A.003/U


Hello. Thanks to you, I'm getting a lot out of the course.

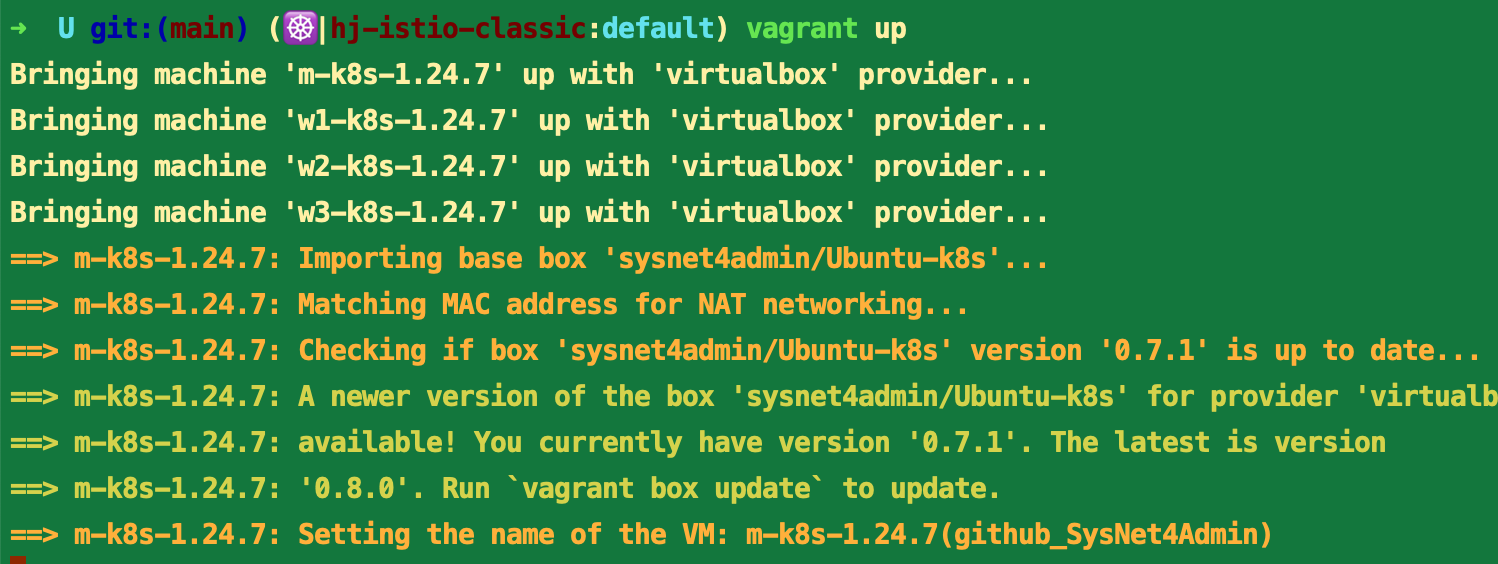

I installed A.003/U from the course materials with vagrant. I used version 1.24.7: the README says 1.24.8, but the Vagrantfile specifies 1.24.7, so that is what I installed. After a forced shutdown, I started the VMs in VirtualBox again, checked the nodes, and got the following error:

```
root@m-k8s:~# k get nodes
E0427 14:58:18.486078 1922 memcache.go:265] couldn't get current server API group list: Get "https://192.168.1.10:6443/api?timeout=32s": dial tcp 192.168.1.10:6443: connect: connection refused
E0427 14:58:18.486251 1922 memcache.go:265] couldn't get current server API group list: Get "https://192.168.1.10:6443/api?timeout=32s": dial tcp 192.168.1.10:6443: connect: connection refused
E0427 14:58:18.487807 1922 memcache.go:265] couldn't get current server API group list: Get "https://192.168.1.10:6443/api?timeout=32s": dial tcp 192.168.1.10:6443: connect: connection refused
E0427 14:58:18.489723 1922 memcache.go:265] couldn't get current server API group list: Get "https://192.168.1.10:6443/api?timeout=32s": dial tcp 192.168.1.10:6443: connect: connection refused
E0427 14:58:18.491648 1922 memcache.go:265] couldn't get current server API group list: Get "https://192.168.1.10:6443/api?timeout=32s": dial tcp 192.168.1.10:6443: connect: connection refused
The connection to the server 192.168.1.10:6443 was refused - did you specify the right host or port?
```

Just in case, I ran vagrant up again to reinstall everything, started it, then shut it down and restarted it, and the same error appeared again.
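For context, `connection refused` usually means the packets reached 192.168.1.10 but nothing was listening on port 6443, i.e. the kube-apiserver was not running, rather than a routing problem. Assuming the cluster was built with kubeadm, the API server runs as a static Pod that kubelet starts, so a broken kubelet typically surfaces exactly like this. A minimal Ruby sketch for telling "refused" apart from "unreachable" is below; `probe_port` is a hypothetical helper, not part of kubectl or the course scripts.

```ruby
require 'socket'

# Hypothetical diagnostic helper (assumption, not from the course material):
# classifies a TCP endpoint as :open, :refused or :unreachable.
def probe_port(host, port, timeout: 2)
  # With a block, Socket.tcp yields the connected socket, closes it
  # afterwards and returns the block's value.
  Socket.tcp(host, port, connect_timeout: timeout) { :open }
rescue Errno::ECONNREFUSED
  # The host answered, but no process is listening on the port
  # (e.g. kube-apiserver is not running).
  :refused
rescue SystemCallError, SocketError, IOError
  # Timeouts, unreachable networks, name resolution failures, etc.
  :unreachable
end
```

Called as `probe_port('192.168.1.10', 6443)`, a result of `:refused` would match the error above, while `:unreachable` would point at the VirtualBox network instead.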

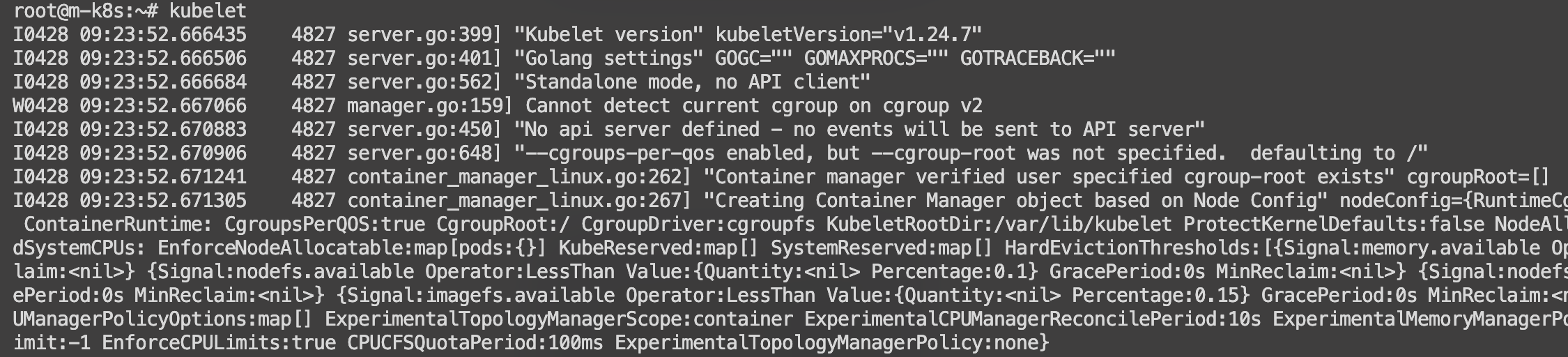

Just in case, I ran kubelet directly and got the following log:

```
root@m-k8s:~/.kube# kubelet
I0427 15:09:00.641157 2469 server.go:415] "Kubelet version" kubeletVersion="v1.27.1"
I0427 15:09:00.641178 2469 server.go:417] "Golang settings" GOGC="" GOMAXPROCS="" GOTRACEBACK=""
I0427 15:09:00.641271 2469 server.go:578] "Standalone mode, no API client"
I0427 15:09:00.643456 2469 server.go:466] "No api server defined - no events will be sent to API server"
I0427 15:09:00.643466 2469 server.go:662] "--cgroups-per-qos enabled, but --cgroup-root was not specified. defaulting to /"
I0427 15:09:00.643675 2469 container_manager_linux.go:266] "Container manager verified user specified cgroup-root exists" cgroupRoot=[]
I0427 15:09:00.643706 2469 container_manager_linux.go:271] "Creating Container Manager object based on Node Config" nodeConfig={RuntimeCgroupsName: SystemCgroupsName: KubeletCgroupsName: KubeletOOMScoreAdj:-999 ContainerRuntime: CgroupsPerQOS:true CgroupRoot:/ CgroupDriver:cgroupfs KubeletRootDir:/var/lib/kubelet ProtectKernelDefaults:false NodeAllocatableConfig:{KubeReservedCgroupName: SystemReservedCgroupName: ReservedSystemCPUs: EnforceNodeAllocatable:map[pods:{}] KubeReserved:map[] SystemReserved:map[] HardEvictionThresholds:[]} QOSReserved:map[] CPUManagerPolicy:none CPUManagerPolicyOptions:map[] TopologyManagerScope:container CPUManagerReconcilePeriod:10s ExperimentalMemoryManagerPolicy:None ExperimentalMemoryManagerReservedMemory:[] PodPidsLimit:-1 EnforceCPULimits:true CPUCFSQuotaPeriod:100ms TopologyManagerPolicy:none ExperimentalTopologyManagerPolicyOptions:map[]}
I0427 15:09:00.643715 2469 topology_manager.go:136] "Creating topology manager with policy per scope" topologyPolicyName="none" topologyScopeName="container"
I0427 15:09:00.643722 2469 container_manager_linux.go:302] "Creating device plugin manager"
I0427 15:09:00.643742 2469 state_mem.go:36] "Initialized new in-memory state store"
I0427 15:09:00.649586 2469 kubelet.go:411] "Kubelet is running in standalone mode, will skip API server sync"
I0427 15:09:00.650341 2469 kuberuntime_manager.go:257] "Container runtime initialized" containerRuntime="containerd" version="1.6.20" apiVersion="v1"
I0427 15:09:00.650597 2469 volume_host.go:75] "KubeClient is nil. Skip initialization of CSIDriverLister"
W0427 15:09:00.652329 2469 csi_plugin.go:189] kubernetes.io/csi: kubeclient not set, assuming standalone kubelet
W0427 15:09:00.652338 2469 csi_plugin.go:266] Skipping CSINode initialization, kubelet running in standalone mode
I0427 15:09:00.652599 2469 server.go:1168] "Started kubelet"
I0427 15:09:00.652626 2469 kubelet.go:1548] "No API server defined - no node status update will be sent"
I0427 15:09:00.653540 2469 server.go:194] "Starting to listen read-only" address="0.0.0.0" port=10255
I0427 15:09:00.653722 2469 ratelimit.go:65] "Setting rate limiting for podresources endpoint" qps=100 burstTokens=10
I0427 15:09:00.653736 2469 server.go:162] "Starting to listen" address="0.0.0.0" port=10250
E0427 15:09:00.653787 2469 cri_stats_provider.go:455] "Failed to get the info of the filesystem with mountpoint" err="unable to find data in memory cache" mountpoint="/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs"
E0427 15:09:00.653807 2469 kubelet.go:1400] "Image garbage collection failed once. Stats initialization may not have completed yet" err="invalid capacity 0 on image filesystem"
I0427 15:09:00.654351 2469 fs_resource_analyzer.go:67] "Starting FS ResourceAnalyzer"
I0427 15:09:00.654429 2469 server.go:461] "Adding debug handlers to kubelet server"
I0427 15:09:00.654857 2469 volume_manager.go:284] "Starting Kubelet Volume Manager"
I0427 15:09:00.655355 2469 desired_state_of_world_populator.go:145] "Desired state populator starts to run"
I0427 15:09:00.671132 2469 cpu_manager.go:214] "Starting CPU manager" policy="none"
I0427 15:09:00.671158 2469 cpu_manager.go:215] "Reconciling" reconcilePeriod="10s"
I0427 15:09:00.671171 2469 state_mem.go:36] "Initialized new in-memory state store"
I0427 15:09:00.671471 2469 state_mem.go:88] "Updated default CPUSet" cpuSet=""
I0427 15:09:00.671492 2469 state_mem.go:96] "Updated CPUSet assignments" assignments=map[]
I0427 15:09:00.671500 2469 policy_none.go:49] "None policy: Start"
I0427 15:09:00.672503 2469 memory_manager.go:169] "Starting memorymanager" policy="None"
I0427 15:09:00.672520 2469 state_mem.go:35] "Initializing new in-memory state store"
I0427 15:09:00.672895 2469 state_mem.go:75] "Updated machine memory state"
I0427 15:09:00.676749 2469 kubelet_network_linux.go:63] "Initialized iptables rules." protocol=IPv4
I0427 15:09:00.677830 2469 kubelet_network_linux.go:63] "Initialized iptables rules." protocol=IPv6
I0427 15:09:00.677847 2469 status_manager.go:203] "Kubernetes client is nil, not starting status manager"
I0427 15:09:00.677867 2469 kubelet.go:2257] "Starting kubelet main sync loop"
E0427 15:09:00.677896 2469 kubelet.go:2281] "Skipping pod synchronization" err="[container runtime status check may not have completed yet, PLEG is not healthy: pleg has yet to be successful]"
I0427 15:09:00.706770 2469 manager.go:455] "Failed to read data from checkpoint" checkpoint="kubelet_internal_checkpoint" err="checkpoint is not found"
I0427 15:09:00.707970 2469 plugin_manager.go:118] "Starting Kubelet Plugin Manager"
I0427 15:09:00.718193 2469 reconciler_new.go:29] "Reconciler: start to sync state"
I0427 15:09:00.757274 2469 desired_state_of_world_populator.go:153] "Finished populating initial desired state of world"
I0427 15:09:00.780684 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="4f30c0e29a0f50f0ac88f0ede3349dc7330049aab9679fab780ce0509f5880d5"
I0427 15:09:00.780763 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="688af4ee6a0b5d1a70a23a6df6ab95aa4444dbc18051bd0c5ff5987f6fdc9f6c"
I0427 15:09:00.780897 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="70eb6a49bde6dfa88689e65d6e0ce7df0580d7695aa4f07b3c230ac0304dfad3"
I0427 15:09:00.780924 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="c71ce1abd0a094beb98683158c68aa42ef4dc86e2ab01b79265af907c03432e3"
I0427 15:09:00.780937 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="ab52d73f40d007573c50517a218fc897ae545d4e5088f1df32fcb93f0025dde0"
I0427 15:09:00.780949 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="7edc40acc8631594bd532b918db1337a823e323027d056c8e1cf1d524de69f65"
I0427 15:09:00.780961 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="5f3831d2e20022c990dff7bb346ce9619b45a34e80ee93b4b3d6a8cd34492357"
I0427 15:09:00.780973 2469 pod_container_deletor.go:80] "Container not found in pod's containers" containerID="c44a3606db9d1d371a33d5ecd54c094f2962fdf034ddf051c7e5a49b889abb0f"
I0427 15:09:00.819081 2469 reconciler_common.go:172] "operationExecutor.UnmountVolume started for volume \"config-volume\" (UniqueName: \"kubernetes.io/configmap/04ba41e5-b5ea-491f-9dc4-3989094542ce-config-volume\") pod \"04ba41e5-b5ea-491f-9dc4-3989094542ce\" (UID: \"04ba41e5-b5ea-491f-9dc4-3989094542ce\") "
I0427 15:09:00.819093 2469 reconciler_common.go:172] "operationExecutor.UnmountVolume started for volume \"kube-api-access-8kfmr\" (UniqueName: \"kubernetes.io/projected/04ba41e5-b5ea-491f-9dc4-3989094542ce-kube-api-access-8kfmr\") pod \"04ba41e5-b5ea-491f-9dc4-3989094542ce\" (UID: \"04ba41e5-b5ea-491f-9dc4-3989094542ce\") "
I0427 15:09:00.819099 2469 reconciler_common.go:172] "operationExecutor.UnmountVolume started for volume \"config-volume\" (UniqueName: \"kubernetes.io/configmap/225235fa-89f9-4fc8-a190-9962ba532fb4-config-volume\") pod \"225235fa-89f9-4fc8-a190-9962ba532fb4\" (UID: \"225235fa-89f9-4fc8-a190-9962ba532fb4\") "
I0427 15:09:00.819108 2469 reconciler_common.go:172] "operationExecutor.UnmountVolume started for volume \"kube-api-access-rvx4m\" (UniqueName: \"kubernetes.io/projected/225235fa-89f9-4fc8-a190-9962ba532fb4-kube-api-access-rvx4m\") pod \"225235fa-89f9-4fc8-a190-9962ba532fb4\" (UID: \"225235fa-89f9-4fc8-a190-9962ba532fb4\") "
I0427 15:09:00.819121 2469 reconciler_common.go:172] "operationExecutor.UnmountVolume started for volume \"kube-api-access-br4wk\" (UniqueName: \"kubernetes.io/projected/b5e3a923-48f5-46f1-bd6f-7e9136a4c0bd-kube-api-access-br4wk\") pod \"b5e3a923-48f5-46f1-bd6f-7e9136a4c0bd\" (UID: \"b5e3a923-48f5-46f1-bd6f-7e9136a4c0bd\") "
I0427 15:09:00.819128 2469 reconciler_common.go:172] "operationExecutor.UnmountVolume started for volume \"kube-proxy\" (UniqueName: \"kubernetes.io/configmap/f822a0ef-4459-400e-b73a-dfd9d8d2f614-kube-proxy\") pod \"f822a0ef-4459-400e-b73a-dfd9d8d2f614\" (UID: \"f822a0ef-4459-400e-b73a-dfd9d8d2f614\") "
I0427 15:09:00.819134 2469 reconciler_common.go:172] "operationExecutor.UnmountVolume started for volume \"kube-api-access-ctcvw\" (UniqueName: \"kubernetes.io/projected/f822a0ef-4459-400e-b73a-dfd9d8d2f614-kube-api-access-ctcvw\") pod \"f822a0ef-4459-400e-b73a-dfd9d8d2f614\" (UID: \"f822a0ef-4459-400e-b73a-dfd9d8d2f614\") "
W0427 15:09:00.820262 2469 empty_dir.go:525] Warning: Failed to clear quota on /var/lib/kubelet/pods/04ba41e5-b5ea-491f-9dc4-3989094542ce/volumes/kubernetes.io~configmap/config-volume: clearQuota called, but quotas disabled
```

Every time I restart after a shutdown, the node can no longer be found. Reinstalling every time is tedious, and even after reading the official documentation and searching, I can't figure out how to fix the problem from this state.

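Looking at the 1.24.8 changelog, there seems to be an issue where a node's endpoint can't be found, but I don't fully understand it, so it's hard to tell whether that is the cause.

I'd appreciate any advice on how to fix this node-not-found problem!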
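The first line of the kubelet log already carries a key clue: kubelet reports `v1.27.1`, while the Vagrantfile pins `k8s_V = '1.24.7-00'`. Note also that running the bare `kubelet` binary without flags starts it in standalone mode ("Standalone mode, no API client"), so this output mainly tells you which binary is installed; `systemctl status kubelet` and `journalctl -u kubelet` show the service itself. A minimal sketch for pulling the version out of such a log line; `kubelet_version_from_log` is a hypothetical helper, and the regular expression only assumes the `kubeletVersion="v..."` field seen in the log.

```ruby
# Hypothetical helper: returns the version reported in kubelet's
# structured startup line, e.g. "1.27.1", or nil if the field is absent.
def kubelet_version_from_log(log)
  log[/kubeletVersion="v?(\d+\.\d+\.\d+)"/, 1]
end

line = 'I0427 15:09:00.641157 2469 server.go:415] "Kubelet version" kubeletVersion="v1.27.1"'
puts kubelet_version_from_log(line) # prints 1.27.1
```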

1 Answer


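Hello.

I'm not sure why, but it looks like you're currently running the latest Kubernetes version.

I don't always keep the README in sync, so it can be wrong --;;;

I change things often... but v1.24.7 is correct at the moment. How about running `rerepo` again, deleting everything cleanly, and then starting over?

Also, was the version displayed like this from the start? I suspect it wasn't this version.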

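Yes, I did install version 1.24.7.

In the A.003/U files you provided, I only changed the CPU count, memory, and vb.name, left everything else as is, and ran vagrant up.

Is there any way to fix things from the current state so that the node is recognized again? Or is this not a simple problem? T_T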

```ruby
# -*- mode: ruby -*-
# vi: set ft=ruby :

## configuration variables ##
# max number of worker nodes
N = 3
# each of components to install
k8s_V = '1.24.7-00' # Kubernetes
docker_V = '5:20.10.20~3-0~ubuntu-jammy' # Docker
ctrd_V = '1.6.8-1' # Containerd
## /configuration variables ##

Vagrant.configure("2") do |config|

  #=============#
  # Master Node #
  #=============#

  config.vm.define "m-k8s-#{k8s_V[0..5]}" do |cfg|
    cfg.vm.box = "sysnet4admin/Ubuntu-k8s"
    cfg.vm.provider "virtualbox" do |vb|
      vb.name = "m-k8s-#{k8s_V[0..5]}"
      vb.cpus = 4
      vb.memory = 16384
      vb.customize ["modifyvm", :id, "--groups", "/k8s-U#{k8s_V[0..5]}-ctrd-#{ctrd_V[0..2]}"]
    end
    cfg.vm.host_name = "m-k8s"
    cfg.vm.network "private_network", ip: "192.168.1.10"
    cfg.vm.network "forwarded_port", guest: 22, host: 60010, auto_correct: true, id: "ssh"
    cfg.vm.synced_folder "../data", "/vagrant", disabled: true
    cfg.vm.provision "shell", path: "k8s_env_build.sh", args: N
    cfg.vm.provision "shell", path: "k8s_pkg_cfg.sh", args: [ k8s_V, docker_V, ctrd_V, "M"]
    cfg.vm.provision "shell", path: "master_node.sh"
  end

  #==============#
  # Worker Nodes #
  #==============#

  (1..N).each do |i|
    config.vm.define "w#{i}-k8s-#{k8s_V[0..5]}" do |cfg|
      cfg.vm.box = "sysnet4admin/Ubuntu-k8s"
      cfg.vm.provider "virtualbox" do |vb|
        vb.name = "w#{i}-k8s-#{k8s_V[0..5]}"
        vb.cpus = 4
        vb.memory = 16384
        vb.customize ["modifyvm", :id, "--groups", "/k8s-U#{k8s_V[0..5]}-ctrd-#{ctrd_V[0..2]}"]
      end
      cfg.vm.host_name = "w#{i}-k8s"
      cfg.vm.network "private_network", ip: "192.168.1.10#{i}"
      cfg.vm.network "forwarded_port", guest: 22, host: "6010#{i}", auto_correct: true, id: "ssh"
      cfg.vm.synced_folder "../data", "/vagrant", disabled: true
      cfg.vm.provision "shell", path: "k8s_env_build.sh", args: N
      cfg.vm.provision "shell", path: "k8s_pkg_cfg.sh", args: [ k8s_V, docker_V, ctrd_V, "W" ]
      cfg.vm.provision "shell", path: "work_nodes.sh"
    end
  end

end
```
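The Vagrantfile derives the VM names and the VirtualBox group from fixed-width slices of the version strings (`k8s_V[0..5]`, `ctrd_V[0..2]`), so the pinned Kubernetes version shows up in every VM name. The sketch below reproduces only that naming logic in plain Ruby; `vm_names` is a hypothetical helper, not part of the course files.

```ruby
# Hypothetical helper mirroring the Vagrantfile's naming expressions.
def vm_names(k8s_v, ctrd_v, workers)
  short = k8s_v[0..5] # '1.24.7-00' -> '1.24.7'
  {
    master: "m-k8s-#{short}",
    workers: (1..workers).map { |i| "w#{i}-k8s-#{short}" },
    group: "/k8s-U#{short}-ctrd-#{ctrd_v[0..2]}" # '1.6.8-1' -> '1.6'
  }
end

names = vm_names('1.24.7-00', '1.6.8-1', 3)
puts names[:master] # prints m-k8s-1.24.7
puts names[:group]  # prints /k8s-U1.24.7-ctrd-1.6
```

One caveat of fixed-width slicing: a two-digit patch release such as `'1.24.10-00'` would be cut to `1.24.1`; something like `k8s_V.split('-').first` would avoid that if the pinned version ever changes.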

Under normal use I had no problems either; as I mentioned, this only started after VirtualBox was force-closed.

Have you had no problems restarting after a shutdown on your side?

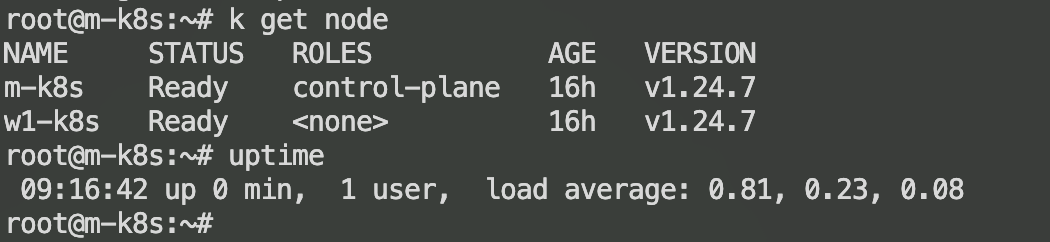

I forcibly powered it off and back on several times, but I can't reproduce the problem.

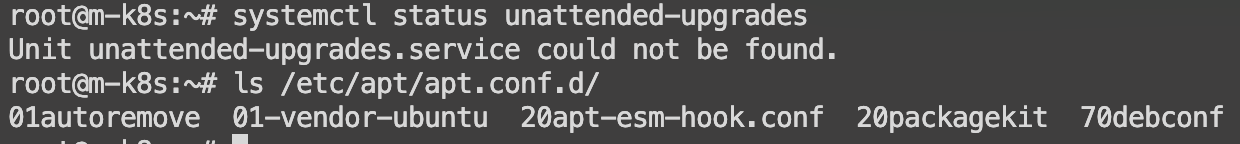

Also, automatic updates are not enabled at the moment.

For reference, the usual way to disable automatic updates:

https://linuxconfig.org/disable-automatic-updates-on-ubuntu-22-04-jammy-jellyfish-linux

So something seems to have gone wrong on your side: your kubelet is the latest version, 1.27.1 <<<

I don't think I can pin down the cause from here.
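The mismatch matters because of the Kubernetes version skew policy: kubelet must never be newer than kube-apiserver, and for the 1.24-era releases it may be at most two minor versions older (newer releases allow three). A 1.27.1 kubelet running against a 1.24.7 control plane breaks the first rule. A minimal sketch of that rule; `kubelet_skew_ok?` is hypothetical and only compares the major and minor numbers.

```ruby
# Hypothetical helper: checks kubelet vs. kube-apiserver versions against
# the skew policy (kubelet not newer than the API server, and at most
# `max_older` minor versions older; 2 for 1.24-era releases).
def kubelet_skew_ok?(kubelet, apiserver, max_older: 2)
  k_major, k_minor = kubelet.delete_prefix('v').split('.').map(&:to_i)
  a_major, a_minor = apiserver.delete_prefix('v').split('.').map(&:to_i)
  return false unless k_major == a_major

  diff = a_minor - k_minor
  diff >= 0 && diff <= max_older
end

puts kubelet_skew_ok?('v1.27.1', '1.24.7') # prints false
puts kubelet_skew_ok?('1.24.7', '1.24.7')  # prints true
```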

I suspect kubelet may have been upgraded when I ran an Ubuntu update or upgrade (though I'm not sure). Thank you for taking the trouble to try to reproduce it, and for your answer.
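If an `apt upgrade` did pull in a newer kubelet, the installed package versions will no longer match the pin in the Vagrantfile. A minimal sketch that compares `dpkg-query` output against `k8s_V`; `drifted_packages` and `PINNED_K8S` are hypothetical names, and the sketch assumes the packages use the `1.24.7-00` version format seen in the Vagrantfile. The official kubeadm installation guide recommends `apt-mark hold kubelet kubeadm kubectl` so that routine upgrades leave these packages alone.

```ruby
PINNED_K8S = '1.24.7-00' # k8s_V from the Vagrantfile

# Hypothetical helper. Expects the output of:
#   dpkg-query -W -f='${Package} ${Version}\n' kubelet kubeadm kubectl
# and returns { package => installed_version } for every package whose
# version differs from the pin.
def drifted_packages(dpkg_output, pinned = PINNED_K8S)
  dpkg_output.each_line.filter_map do |line|
    name, version = line.split
    next if name.nil? || version == pinned
    [name, version]
  end.to_h
end

sample = "kubeadm 1.24.7-00\nkubectl 1.24.7-00\nkubelet 1.27.1-00\n"
drifted_packages(sample).each { |n, v| puts "#{n}: #{v}" } # prints kubelet: 1.27.1-00
```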

It looks normal here...

It might be worth checking again on your side.