Inflearn Community Q&A

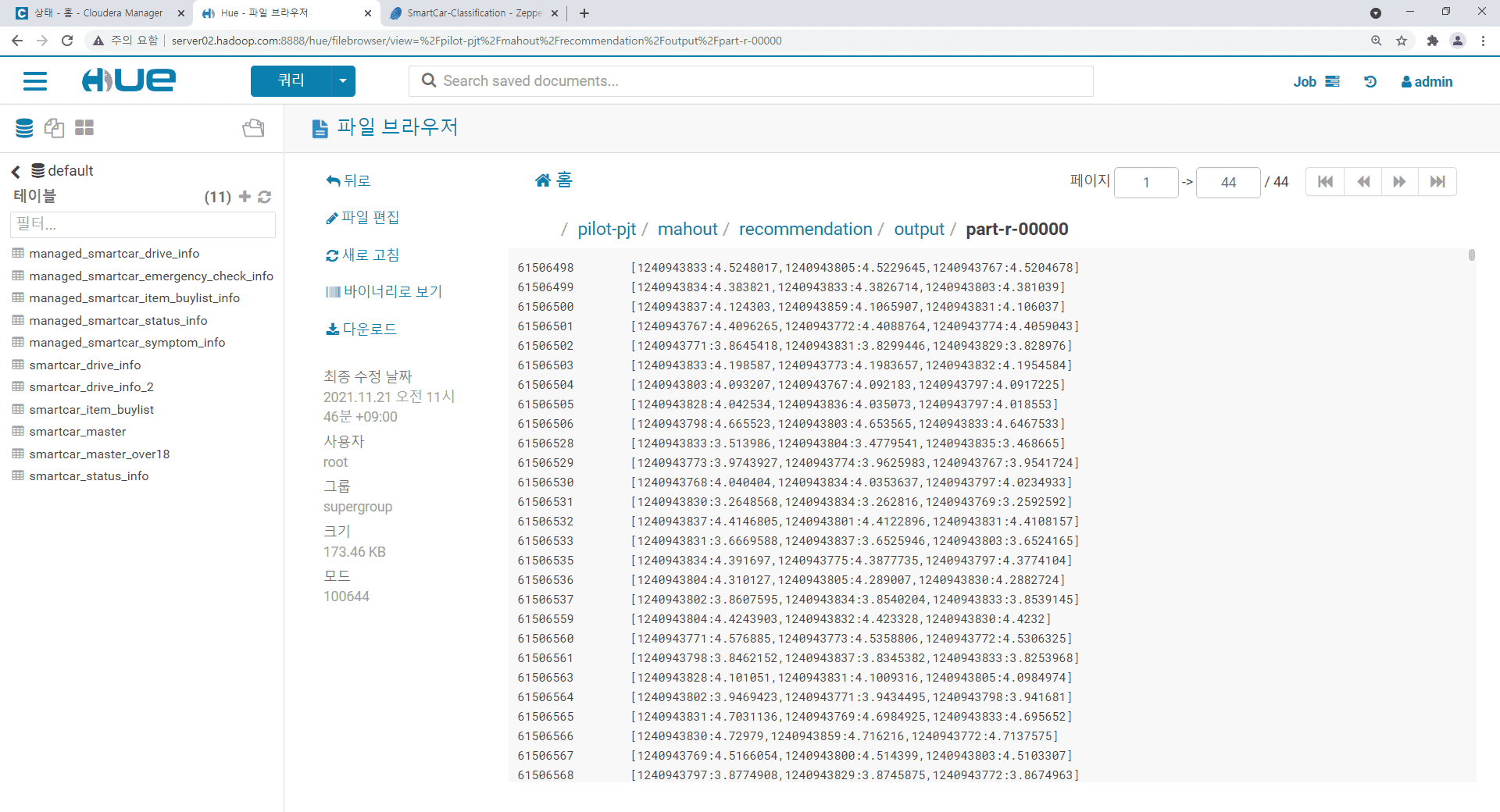

Analysis Step 5 - Mahout Recommendation - Smart Car Accessory Recommendation

Resolved question

Posted 21.11.21 04:09


[root@server02 pilot-pjt]# mahout recommenditembased -i /pilot-pjt/mahout/recommendation/input/item_buylist.txt -o /pilot-pjt/mahout/recommendation/output/ -s SIMILARITY_COCCURRENCE -n 3

Running on hadoop, using /usr/bin/hadoop and HADOOP_CONF_DIR=

MAHOUT-JOB: /home/pilot-pjt/mahout/mahout-examples-0.13.0-job.jar

WARNING: Use "yarn jar" to launch YARN applications.

21/11/21 03:30:22 INFO AbstractJob: Command line arguments: {--booleanData=[false], --endPhase=[2147483647], --input=[/pilot-pjt/mahout/recommendation/input/item_buylist.txt], --maxPrefsInItemSimilarity=[500], --maxPrefsPerUser=[10], --maxSimilaritiesPerItem=[100], --minPrefsPerUser=[1], --numRecommendations=[3], --output=[/pilot-pjt/mahout/recommendation/output/], --similarityClassname=[SIMILARITY_COCCURRENCE], --startPhase=[0], --tempDir=[temp]}

21/11/21 03:30:22 INFO AbstractJob: Command line arguments: {--booleanData=[false], --endPhase=[2147483647], --input=[/pilot-pjt/mahout/recommendation/input/item_buylist.txt], --minPrefsPerUser=[1], --output=[temp/preparePreferenceMatrix], --ratingShift=[0.0], --startPhase=[0], --tempDir=[temp]}

21/11/21 03:30:22 INFO deprecation: mapred.input.dir is deprecated. Instead, use mapreduce.input.fileinputformat.inputdir

21/11/21 03:30:22 INFO deprecation: mapred.compress.map.output is deprecated. Instead, use mapreduce.map.output.compress

21/11/21 03:30:22 INFO deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

21/11/21 03:30:23 INFO RMProxy: Connecting to ResourceManager at server01.hadoop.com/192.168.56.101:8032

21/11/21 03:30:28 INFO JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1637424392789_0002

21/11/21 03:30:32 INFO FileInputFormat: Total input files to process : 1

21/11/21 03:30:32 INFO JobSubmitter: number of splits:1

21/11/21 03:30:33 INFO JobSubmitter: Submitting tokens for job: job_1637424392789_0002

21/11/21 03:30:33 INFO JobSubmitter: Executing with tokens: []

21/11/21 03:30:34 INFO Configuration: resource-types.xml not found

21/11/21 03:30:34 INFO ResourceUtils: Unable to find 'resource-types.xml'.

21/11/21 03:30:34 INFO YarnClientImpl: Submitted application application_1637424392789_0002

21/11/21 03:30:34 INFO Job: The url to track the job: http://server01.hadoop.com:8088/proxy/application_1637424392789_0002/

21/11/21 03:30:34 INFO Job: Running job: job_1637424392789_0002

21/11/21 03:31:34 INFO Job: Job job_1637424392789_0002 running in uber mode : false

21/11/21 03:31:34 INFO Job: map 0% reduce 0%

21/11/21 03:32:18 INFO Job: map 100% reduce 0%

21/11/21 03:32:43 INFO Job: map 100% reduce 100%

21/11/21 03:32:44 INFO Job: Job job_1637424392789_0002 completed successfully

21/11/21 03:32:45 INFO Job: Counters: 54

File System Counters

FILE: Number of bytes read=269

FILE: Number of bytes written=442733

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2072067

HDFS: Number of bytes written=643

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=40610

Total time spent by all reduces in occupied slots (ms)=18665

Total time spent by all map tasks (ms)=40610

Total time spent by all reduce tasks (ms)=18665

Total vcore-milliseconds taken by all map tasks=40610

Total vcore-milliseconds taken by all reduce tasks=18665

Total megabyte-milliseconds taken by all map tasks=41584640

Total megabyte-milliseconds taken by all reduce tasks=19112960

Map-Reduce Framework

Map input records=94178

Map output records=94178

Map output bytes=941780

Map output materialized bytes=265

Input split bytes=151

Combine input records=94178

Combine output records=30

Reduce input groups=30

Reduce shuffle bytes=265

Reduce input records=30

Reduce output records=30

Spilled Records=60

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=475

CPU time spent (ms)=2570

Physical memory (bytes) snapshot=503918592

Virtual memory (bytes) snapshot=5090603008

Total committed heap usage (bytes)=365957120

Peak Map Physical memory (bytes)=366530560

Peak Map Virtual memory (bytes)=2539433984

Peak Reduce Physical memory (bytes)=137388032

Peak Reduce Virtual memory (bytes)=2551169024

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=2071916

File Output Format Counters

Bytes Written=643

21/11/21 03:32:45 INFO RMProxy: Connecting to ResourceManager at server01.hadoop.com/192.168.56.101:8032

21/11/21 03:32:45 INFO JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1637424392789_0003

21/11/21 03:32:47 INFO FileInputFormat: Total input files to process : 1

21/11/21 03:32:48 INFO JobSubmitter: number of splits:1

21/11/21 03:32:49 INFO JobSubmitter: Submitting tokens for job: job_1637424392789_0003

21/11/21 03:32:49 INFO JobSubmitter: Executing with tokens: []

21/11/21 03:32:49 INFO YarnClientImpl: Submitted application application_1637424392789_0003

21/11/21 03:32:49 INFO Job: The url to track the job: http://server01.hadoop.com:8088/proxy/application_1637424392789_0003/

21/11/21 03:32:49 INFO Job: Running job: job_1637424392789_0003

21/11/21 03:33:27 INFO Job: Job job_1637424392789_0003 running in uber mode : false

21/11/21 03:33:27 INFO Job: map 0% reduce 0%

21/11/21 03:33:51 INFO Job: map 100% reduce 0%

21/11/21 03:34:08 INFO Job: map 100% reduce 100%

21/11/21 03:34:08 INFO Job: Job job_1637424392789_0003 completed successfully

21/11/21 03:34:08 INFO Job: Counters: 55

File System Counters

FILE: Number of bytes read=402933

FILE: Number of bytes written=1248777

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2072067

HDFS: Number of bytes written=522978

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=21247

Total time spent by all reduces in occupied slots (ms)=13637

Total time spent by all map tasks (ms)=21247

Total time spent by all reduce tasks (ms)=13637

Total vcore-milliseconds taken by all map tasks=21247

Total vcore-milliseconds taken by all reduce tasks=13637

Total megabyte-milliseconds taken by all map tasks=21756928

Total megabyte-milliseconds taken by all reduce tasks=13964288

Map-Reduce Framework

Map input records=94178

Map output records=94178

Map output bytes=1224314

Map output materialized bytes=402929

Input split bytes=151

Combine input records=0

Combine output records=0

Reduce input groups=2449

Reduce shuffle bytes=402929

Reduce input records=94178

Reduce output records=2449

Spilled Records=188356

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=298

CPU time spent (ms)=2660

Physical memory (bytes) snapshot=503132160

Virtual memory (bytes) snapshot=5092700160

Total committed heap usage (bytes)=365957120

Peak Map Physical memory (bytes)=372359168

Peak Map Virtual memory (bytes)=2539433984

Peak Reduce Physical memory (bytes)=130772992

Peak Reduce Virtual memory (bytes)=2553266176

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=2071916

File Output Format Counters

Bytes Written=522978

org.apache.mahout.cf.taste.hadoop.item.ToUserVectorsReducer$Counters

USERS=2449

21/11/21 03:34:08 INFO RMProxy: Connecting to ResourceManager at server01.hadoop.com/192.168.56.101:8032

21/11/21 03:34:08 INFO JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1637424392789_0004

21/11/21 03:34:10 INFO FileInputFormat: Total input files to process : 1

21/11/21 03:34:10 INFO JobSubmitter: number of splits:1

21/11/21 03:34:10 INFO JobSubmitter: Submitting tokens for job: job_1637424392789_0004

21/11/21 03:34:10 INFO JobSubmitter: Executing with tokens: []

21/11/21 03:34:10 INFO YarnClientImpl: Submitted application application_1637424392789_0004

21/11/21 03:34:10 INFO Job: The url to track the job: http://server01.hadoop.com:8088/proxy/application_1637424392789_0004/

21/11/21 03:34:10 INFO Job: Running job: job_1637424392789_0004

21/11/21 03:34:24 INFO Job: Job job_1637424392789_0004 running in uber mode : false

21/11/21 03:34:24 INFO Job: map 0% reduce 0%

21/11/21 03:34:33 INFO Job: Task Id : attempt_1637424392789_0004_m_000000_0, Status : FAILED

[2021-11-21 03:34:31.664]Container killed on request. Exit code is 137

[2021-11-21 03:34:31.666]Container exited with a non-zero exit code 137.

[2021-11-21 03:34:31.675]Killed by external signal

21/11/21 03:34:43 INFO Job: map 100% reduce 0%

21/11/21 03:34:56 INFO Job: map 100% reduce 100%

21/11/21 03:34:57 INFO Job: Job job_1637424392789_0004 completed successfully

21/11/21 03:34:57 INFO Job: Counters: 56

File System Counters

FILE: Number of bytes read=250272

FILE: Number of bytes written=942767

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=523138

HDFS: Number of bytes written=424085

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Failed map tasks=1

Launched map tasks=2

Launched reduce tasks=1

Other local map tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=14569

Total time spent by all reduces in occupied slots (ms)=9859

Total time spent by all map tasks (ms)=14569

Total time spent by all reduce tasks (ms)=9859

Total vcore-milliseconds taken by all map tasks=14569

Total vcore-milliseconds taken by all reduce tasks=9859

Total megabyte-milliseconds taken by all map tasks=14918656

Total megabyte-milliseconds taken by all reduce tasks=10095616

Map-Reduce Framework

Map input records=2449

Map output records=52916

Map output bytes=1005404

Map output materialized bytes=250274

Input split bytes=160

Combine input records=52916

Combine output records=30

Reduce input groups=30

Reduce shuffle bytes=250274

Reduce input records=30

Reduce output records=30

Spilled Records=60

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=227

CPU time spent (ms)=2000

Physical memory (bytes) snapshot=524152832

Virtual memory (bytes) snapshot=5090603008

Total committed heap usage (bytes)=365957120

Peak Map Physical memory (bytes)=385503232

Peak Map Virtual memory (bytes)=2539433984

Peak Reduce Physical memory (bytes)=138649600

Peak Reduce Virtual memory (bytes)=2551169024

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=522978

File Output Format Counters

Bytes Written=424085

21/11/21 03:34:58 INFO AbstractJob: Command line arguments: {--endPhase=[2147483647], --excludeSelfSimilarity=[true], --input=[temp/preparePreferenceMatrix/ratingMatrix], --maxObservationsPerColumn=[500], --maxObservationsPerRow=[500], --maxSimilaritiesPerRow=[100], --numberOfColumns=[2449], --output=[temp/similarityMatrix], --randomSeed=[-9223372036854775808], --similarityClassname=[SIMILARITY_COCCURRENCE], --startPhase=[0], --tempDir=[temp], --threshold=[4.9E-324]}

21/11/21 03:34:58 INFO RMProxy: Connecting to ResourceManager at server01.hadoop.com/192.168.56.101:8032

21/11/21 03:34:58 INFO JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1637424392789_0005

21/11/21 03:35:00 INFO FileInputFormat: Total input files to process : 1

21/11/21 03:35:00 INFO JobSubmitter: number of splits:1

21/11/21 03:35:00 INFO JobSubmitter: Submitting tokens for job: job_1637424392789_0005

21/11/21 03:35:00 INFO JobSubmitter: Executing with tokens: []

21/11/21 03:35:00 INFO YarnClientImpl: Submitted application application_1637424392789_0005

21/11/21 03:35:00 INFO Job: The url to track the job: http://server01.hadoop.com:8088/proxy/application_1637424392789_0005/

21/11/21 03:35:00 INFO Job: Running job: job_1637424392789_0005

21/11/21 03:35:19 INFO Job: Job job_1637424392789_0005 running in uber mode : false

21/11/21 03:35:19 INFO Job: map 0% reduce 0%

21/11/21 03:35:32 INFO Job: map 100% reduce 0%

21/11/21 03:35:41 INFO Job: map 100% reduce 100%

21/11/21 03:35:42 INFO Job: Job job_1637424392789_0005 completed successfully

21/11/21 03:35:42 INFO Job: Counters: 54

File System Counters

FILE: Number of bytes read=14503

FILE: Number of bytes written=471767

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=424246

HDFS: Number of bytes written=29494

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=9709

Total time spent by all reduces in occupied slots (ms)=6592

Total time spent by all map tasks (ms)=9709

Total time spent by all reduce tasks (ms)=6592

Total vcore-milliseconds taken by all map tasks=9709

Total vcore-milliseconds taken by all reduce tasks=6592

Total megabyte-milliseconds taken by all map tasks=9942016

Total megabyte-milliseconds taken by all reduce tasks=6750208

Map-Reduce Framework

Map input records=30

Map output records=1

Map output bytes=29396

Map output materialized bytes=14499

Input split bytes=161

Combine input records=1

Combine output records=1

Reduce input groups=1

Reduce shuffle bytes=14499

Reduce input records=1

Reduce output records=0

Spilled Records=2

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=276

CPU time spent (ms)=1520

Physical memory (bytes) snapshot=537358336

Virtual memory (bytes) snapshot=5092700160

Total committed heap usage (bytes)=365957120

Peak Map Physical memory (bytes)=398348288

Peak Map Virtual memory (bytes)=2539433984

Peak Reduce Physical memory (bytes)=139010048

Peak Reduce Virtual memory (bytes)=2553266176

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=424085

File Output Format Counters

Bytes Written=98

21/11/21 03:35:42 INFO RMProxy: Connecting to ResourceManager at server01.hadoop.com/192.168.56.101:8032

21/11/21 03:35:42 INFO JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1637424392789_0006

21/11/21 03:35:43 INFO FileInputFormat: Total input files to process : 1

21/11/21 03:35:43 INFO JobSubmitter: number of splits:1

21/11/21 03:35:43 INFO JobSubmitter: Submitting tokens for job: job_1637424392789_0006

21/11/21 03:35:43 INFO JobSubmitter: Executing with tokens: []

21/11/21 03:35:43 INFO YarnClientImpl: Submitted application application_1637424392789_0006

21/11/21 03:35:43 INFO Job: The url to track the job: http://server01.hadoop.com:8088/proxy/application_1637424392789_0006/

21/11/21 03:35:43 INFO Job: Running job: job_1637424392789_0006

21/11/21 03:35:56 INFO Job: Job job_1637424392789_0006 running in uber mode : false

21/11/21 03:35:56 INFO Job: map 0% reduce 0%

21/11/21 03:36:10 INFO Job: Task Id : attempt_1637424392789_0006_m_000000_0, Status : FAILED

Error: java.lang.IllegalStateException: java.lang.ClassNotFoundException: SIMILARITY_COCCURRENCE

at org.apache.mahout.common.ClassUtils.instantiateAs(ClassUtils.java:30)

at org.apache.mahout.math.hadoop.similarity.cooccurrence.RowSimilarityJob$VectorNormMapper.setup(RowSimilarityJob.java:270)

at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:143)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:799)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.ClassNotFoundException: SIMILARITY_COCCURRENCE

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:264)

at org.apache.mahout.common.ClassUtils.instantiateAs(ClassUtils.java:28)

... 9 more

21/11/21 03:36:17 INFO Job: Task Id : attempt_1637424392789_0006_m_000000_1, Status : FAILED

Error: java.lang.IllegalStateException: java.lang.ClassNotFoundException: SIMILARITY_COCCURRENCE

at org.apache.mahout.common.ClassUtils.instantiateAs(ClassUtils.java:30)

at org.apache.mahout.math.hadoop.similarity.cooccurrence.RowSimilarityJob$VectorNormMapper.setup(RowSimilarityJob.java:270)

at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:143)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:799)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.ClassNotFoundException: SIMILARITY_COCCURRENCE

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:264)

at org.apache.mahout.common.ClassUtils.instantiateAs(ClassUtils.java:28)

... 9 more

21/11/21 03:36:24 INFO Job: Task Id : attempt_1637424392789_0006_m_000000_2, Status : FAILED

Error: java.lang.IllegalStateException: java.lang.ClassNotFoundException: SIMILARITY_COCCURRENCE

at org.apache.mahout.common.ClassUtils.instantiateAs(ClassUtils.java:30)

at org.apache.mahout.math.hadoop.similarity.cooccurrence.RowSimilarityJob$VectorNormMapper.setup(RowSimilarityJob.java:270)

at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:143)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:799)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.ClassNotFoundException: SIMILARITY_COCCURRENCE

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:264)

at org.apache.mahout.common.ClassUtils.instantiateAs(ClassUtils.java:28)

... 9 more

21/11/21 03:36:31 INFO Job: map 100% reduce 100%

21/11/21 03:36:32 INFO Job: Job job_1637424392789_0006 failed with state FAILED due to: Task failed task_1637424392789_0006_m_000000

Job failed as tasks failed. failedMaps:1 failedReduces:0 killedMaps:0 killedReduces: 0

21/11/21 03:36:33 INFO Job: Counters: 10

Job Counters

Failed map tasks=4

Killed reduce tasks=1

Launched map tasks=4

Other local map tasks=3

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=27630

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=27630

Total vcore-milliseconds taken by all map tasks=27630

Total megabyte-milliseconds taken by all map tasks=28293120

21/11/21 03:36:33 INFO RMProxy: Connecting to ResourceManager at server01.hadoop.com/192.168.56.101:8032

21/11/21 03:36:33 INFO JobResourceUploader: Disabling Erasure Coding for path: /user/root/.staging/job_1637424392789_0007

21/11/21 03:36:34 INFO FileInputFormat: Total input files to process : 1

21/11/21 03:36:34 INFO JobSubmitter: Cleaning up the staging area /user/root/.staging/job_1637424392789_0007

Exception in thread "main" org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: hdfs://server01.hadoop.com:8020/user/root/temp/similarityMatrix

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.singleThreadedListStatus(FileInputFormat.java:330)

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:272)

at org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat.listStatus(SequenceFileInputFormat.java:59)

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.getSplits(FileInputFormat.java:394)

at org.apache.hadoop.mapreduce.lib.input.DelegatingInputFormat.getSplits(DelegatingInputFormat.java:115)

at org.apache.hadoop.mapreduce.JobSubmitter.writeNewSplits(JobSubmitter.java:310)

at org.apache.hadoop.mapreduce.JobSubmitter.writeSplits(JobSubmitter.java:327)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:200)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1570)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1567)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1567)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1588)

at org.apache.mahout.cf.taste.hadoop.item.RecommenderJob.run(RecommenderJob.java:249)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.mahout.cf.taste.hadoop.item.RecommenderJob.main(RecommenderJob.java:335)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.ProgramDriver$ProgramDescription.invoke(ProgramDriver.java:71)

at org.apache.hadoop.util.ProgramDriver.run(ProgramDriver.java:144)

at org.apache.hadoop.util.ProgramDriver.driver(ProgramDriver.java:152)

at org.apache.mahout.driver.MahoutDriver.main(MahoutDriver.java:195)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

[root@server02 pilot-pjt]#

Answers (2)


2021. 11. 21. 10:05

Hello! This is 빅디.

It looks like you're nearly done with the pilot project. Keep going, you're almost there!

The failure appears to be caused by a typo in the similarity option (-s) of your Mahout command:

[root@server02 pilot-pjt]# mahout recommenditembased -i /pilot-pjt/mahout/recommendation/input/item_buylist.txt -o /pilot-pjt/mahout/recommendation/output/ -s SIMILARITY_COCCURRENCE -n 3
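Why does a one-letter typo surface as a `ClassNotFoundException`? Based on Mahout 0.13's `RowSimilarityJob` (worth double-checking on your version), the `-s` value is first matched against Mahout's predefined similarity names, and a value that matches none of them is used as a fully qualified Java class name instead. `SIMILARITY_COCCURRENCE` matches nothing, so every map attempt of the similarity job tries to load a class with that name and fails. The next job then stops with `Input path does not exist` for `/user/root/temp/similarityMatrix`, because that matrix was never written. A small pre-flight check can catch this before any job is submitted. The sketch below is illustrative only: `check_similarity` is a hypothetical helper, and its name list is assumed to match Mahout's `VectorSimilarityMeasures` enum, so compare it with the list printed by `mahout recommenditembased --help`.

```shell
#!/usr/bin/env bash
# Sketch: validate a Mahout -s (similarityClassname) value before submitting jobs.
# check_similarity is a hypothetical helper, not part of Mahout.
# ASSUMPTION: this list mirrors Mahout's predefined similarity names;
# verify it against `mahout recommenditembased --help` for your version.

check_similarity() {
  local name="$1"
  local known=(
    SIMILARITY_COOCCURRENCE
    SIMILARITY_LOGLIKELIHOOD
    SIMILARITY_TANIMOTO_COEFFICIENT
    SIMILARITY_CITY_BLOCK
    SIMILARITY_COSINE
    SIMILARITY_PEARSON_CORRELATION
    SIMILARITY_EUCLIDEAN_DISTANCE
  )
  local k
  for k in "${known[@]}"; do
    if [[ "$name" == "$k" ]]; then
      echo "ok: $name"
      return 0
    fi
  done
  # Any other value would be treated as a Java class name and must exist on the classpath.
  echo "unknown similarity: $name (expected one of: ${known[*]})" >&2
  return 1
}

check_similarity SIMILARITY_COOCCURRENCE          # prints "ok: SIMILARITY_COOCCURRENCE"
check_similarity SIMILARITY_COCCURRENCE || true   # reports the typo on stderr
```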

Please change that value to SIMILARITY_COOCCURRENCE (your command has SIMILARITY_COCCURRENCE, which is missing one O) and run it again.
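A minimal rerun sketch, assuming the paths from the question. The jobs that succeeded before the failure already wrote intermediate data under `temp` (which the log shows resolving to `/user/root/temp`), so a plain rerun may stop with an "output directory already exists" error; the sketch therefore removes `temp` and the output directory, if present, before running. `DRY_RUN` is a hypothetical switch added for this script: left at its default of 1, it only prints the command. Deleting the output directory also discards results from any earlier successful run.

```shell
#!/usr/bin/env bash
# Sketch: re-run the item-based recommender with the corrected similarity name.
# Paths come from the question; DRY_RUN is a hypothetical switch for this script only.
set -euo pipefail

INPUT=/pilot-pjt/mahout/recommendation/input/item_buylist.txt
OUTPUT=/pilot-pjt/mahout/recommendation/output/
SIMILARITY=SIMILARITY_COOCCURRENCE   # CO-OCCURRENCE with two O's; the typo had only one

cmd=(mahout recommenditembased -i "$INPUT" -o "$OUTPUT" -s "$SIMILARITY" -n 3)

if [[ "${DRY_RUN:-1}" == "1" ]]; then
  # Only show what would be executed.
  echo "${cmd[*]}"
else
  # Remove leftovers from the failed run; -f stays quiet if a path does not exist.
  # Relative "temp" resolves to /user/<user>/temp (here /user/root/temp).
  hdfs dfs -rm -r -f temp "$OUTPUT"
  "${cmd[@]}"
fi
```

To actually clean up and submit the job, run the script with DRY_RUN=0 set in the environment.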

- Best regards, 빅디