Inflearn Community Q&A

ch1. Error when running controlplane_node.sh


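I answer questions, but Q&A is not included in the course fee. That said, if a lab doesn't work or the course contains something incorrect, please let me know and I'll fix it as quickly as I can!

[Before asking]

1. Is your question related to the content covered in the course? Yes

2. Is it something not already covered on Inflearn's Q&A board or in the FAQ? Yes

3. Have you read the guide on how to ask good questions? Yes

(https://www.inflearn.com/blogs/1719)

4. Wait! For questions about the Inflearn service itself, please use 1:1 inquiry.

5. Problems that occur during vagrant up are mostly caused by various conditions on the host system (Windows, macOS). I cannot identify all of them, so they are hard for me to resolve. If you cannot get through vagrant up, please import the virtual machine (VM) image I provide and continue from there.

(https://www.inflearn.com/questions/992407/comment/281901)

[Question]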

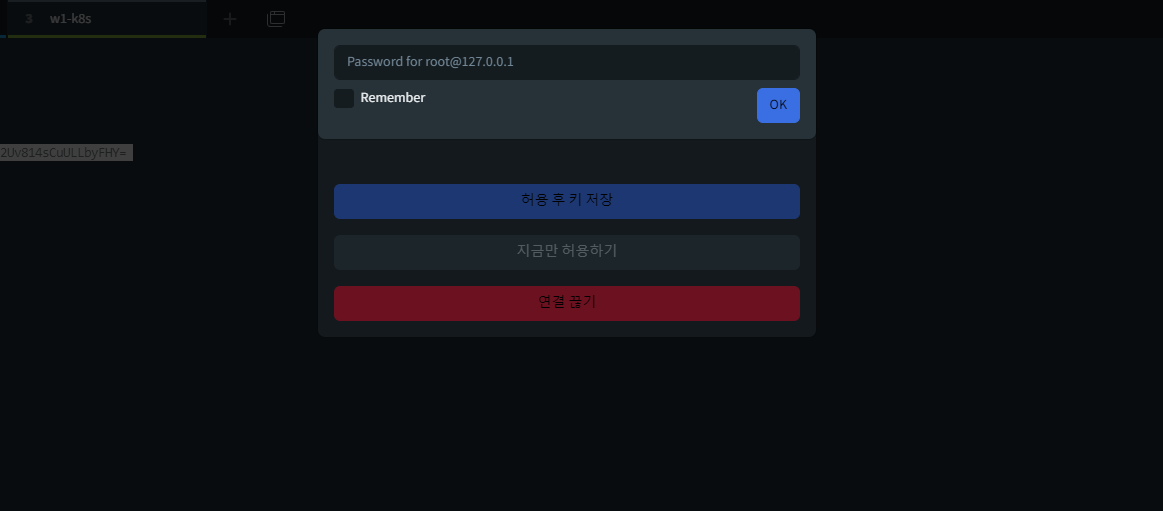

When I connected to the node with Tabby, a password prompt appeared, so I checked the Q&A board, entered the password, and got in.

After that, I copied tabby-v1.0.207/config.yaml again.

PS C:\Users\sua\sua-study\k8s-edu\_Lecture_k8s_learning.kit\ch1\1.5\tabby-v1.0.207> cp ./config.yaml $env:APPDATA/tabby/

PS C:\Users\sua\sua-study\k8s-edu\_Lecture_k8s_learning.kit\ch1\1.5\tabby-v1.0.207> $env:APPDATA

C:\Users\sua\AppData\Roaming

PS C:\Users\sua\sua-study\k8s-edu\_Lecture_k8s_learning.kit\ch1\1.5\tabby-v1.0.207> cd C:\Users\sua\AppData\Roaming\tabby

PS C:\Users\sua\AppData\Roaming\tabby> ls

Directory: C:\Users\sua\AppData\Roaming\tabby

Mode LastWriteTime Length Name

---- ------------- ------ ----

d----- 2024-11-09 11:20 PM blob_storage

d----- 2024-11-09 11:20 PM Code Cache

d----- 2024-11-09 11:30 PM Crashpad

d----- 2024-11-09 11:20 PM DawnCache

d----- 2024-11-09 11:20 PM Dictionaries

d----- 2024-11-09 11:20 PM GPUCache

d----- 2024-11-09 11:20 PM Local Storage

d----- 2024-11-09 11:30 PM Network

d----- 2024-11-09 11:20 PM plugins

d----- 2024-11-09 11:20 PM sentry

-a---- 2024-11-09 11:20 PM 36 .updaterId

-a---- 2024-11-09 12:17 PM 9408 config.yaml

-a---- 2024-11-09 11:23 PM 9377 config.yaml.backup

-a---- 2024-11-09 11:20 PM 434 Local State

-a---- 2024-11-09 11:20 PM 0 lockfile

-a---- 2024-11-09 11:27 PM 5190 log.txt

-a---- 2024-11-09 11:20 PM 57 Preferences

-a---- 2024-11-09 11:20 PM 2 window.json

I connected to the control plane node and ran controlplane_node.sh, and it keeps failing with "No such file or directory" and "unable to load certificate" errors.

root@cp-k8s:~/_Lecture_k8s_learning.kit.git/ch1/1.5# ./controlplane_node.sh

I1109 23:46:22.735630 2906 version.go:256] remote version is much newer: v1.31.2; falling back to: stable-1.30

[init] Using Kubernetes version: v1.30.6

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with --ignore-preflight-errors=...

To see the stack trace of this error execute with --v=5 or higher

cp: cannot stat '/etc/kubernetes/admin.conf': No such file or directory

chown: cannot access '/root/.kube/config': No such file or directory

error: error validating "https://raw.githubusercontent.com/sysnet4admin/IaC/main/k8s/CNI/172.16_net_calico_v3.26.0.yaml": error validating data: failed to download openapi: Get "http://localhost:8080/openapi/v2?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused; if you choose to ignore these errors, turn validation off with --validate=false

fatal: destination path '_Lecture_k8s_starter.kit' already exists and is not an empty directory.

mv: cannot stat '/home/vagrant/_Lecture_k8s_starter.kit': No such file or directory

find: ‘/root/_Lecture_k8s_starter.kit’: No such file or directory

Cloning into '/tmp/update-kube-cert'...

remote: Enumerating objects: 166, done.

remote: Counting objects: 100% (54/54), done.

remote: Compressing objects: 100% (45/45), done.

remote: Total 166 (delta 18), reused 20 (delta 8), pack-reused 112 (from 1)

Receiving objects: 100% (166/166), 63.56 KiB | 1.63 MiB/s, done.

Resolving deltas: 100% (81/81), done.

CERTIFICATE EXPIRES

grep: /etc/kubernetes/controller-manager.conf: No such file or directory

Could not read certificate from /dev/fd/63

Unable to load certificate

/etc/kubernetes/controller-manager.config

grep: /etc/kubernetes/scheduler.conf: No such file or directory

Could not read certificate from /dev/fd/63

Unable to load certificate

/etc/kubernetes/scheduler.config

grep: /etc/kubernetes/admin.conf: No such file or directory

Could not read certificate from /dev/fd/63

Unable to load certificate

/etc/kubernetes/admin.config

Could not open file or uri for loading certificate from /etc/kubernetes/pki/ca.crt

40E755C3AE7F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40E755C3AE7F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/ca.crt)

Unable to load certificate

/etc/kubernetes/pki/ca.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/apiserver.crt

40676913F77F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40676913F77F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/apiserver.crt)

Unable to load certificate

/etc/kubernetes/pki/apiserver.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/apiserver-kubelet-client.crt

40A791BE7A7F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40A791BE7A7F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/apiserver-kubelet-client.crt)

Unable to load certificate

/etc/kubernetes/pki/apiserver-kubelet-client.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/front-proxy-ca.crt

40E7648A397F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40E7648A397F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/front-proxy-ca.crt)

Unable to load certificate

/etc/kubernetes/pki/front-proxy-ca.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/front-proxy-client.crt

40D71C6F6E7F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40D71C6F6E7F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/front-proxy-client.crt)

Unable to load certificate

/etc/kubernetes/pki/front-proxy-client.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/etcd/ca.crt

40276AA0EE7F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40276AA0EE7F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/etcd/ca.crt)

Unable to load certificate

/etc/kubernetes/pki/etcd/ca.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/etcd/server.crt

40479185CE7F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40479185CE7F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/etcd/server.crt)

Unable to load certificate

/etc/kubernetes/pki/etcd/server.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/etcd/peer.crt

4037467AD47F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

4037467AD47F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/etcd/peer.crt)

Unable to load certificate

/etc/kubernetes/pki/etcd/peer.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/etcd/healthcheck-client.crt

40F739C5117F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40F739C5117F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/etcd/healthcheck-client.crt)

Unable to load certificate

/etc/kubernetes/pki/etcd/healthcheck-client.crt

Could not open file or uri for loading certificate from /etc/kubernetes/pki/apiserver-etcd-client.crt

40474EDD807F0000:error:16000069:STORE routines:ossl_store_get0_loader_int:unregistered scheme:../crypto/store/store_register.c:237:scheme=file

40474EDD807F0000:error:80000002:system library:file_open:No such file or directory:../providers/implementations/storemgmt/file_store.c:267:calling stat(/etc/kubernetes/pki/apiserver-etcd-client.crt)

Unable to load certificate

/etc/kubernetes/pki/apiserver-etcd-client.crt

[2024-11-09T23:46:25.77+0900][WARNING] does not backup, /etc/kubernetes.old-20241109 already exists

[2024-11-09T23:46:25.78+0900][INFO] updating...

Wait 30 seconds for restarting the Control-Plane Node...

Is there any additional configuration I need...? :(

3 answers

[ Host ]

[ cp-k8s ]

root@cp-k8s:~# cd _Lecture_k8s_learning.kit.git/ch1/1.5

root@cp-k8s:~/_Lecture_k8s_learning.kit.git/ch1/1.5# ll

total 44

drwxr-xr-x 5 root root 4096 Nov 11 06:30 ./

drwxr-xr-x 3 root root 4096 Nov 11 06:30 ../

-rw-r--r-- 1 root root 1656 Nov 11 06:30 .cmd

-rwxr-xr-x 1 root root 1822 Nov 11 06:30 controlplane_node.sh*

-rwxr-xr-x 1 root root 1456 Nov 11 06:30 k8s_env_build.sh*

-rwxr-xr-x 1 root root 1045 Nov 11 06:30 k8s_pkg_cfg.sh*

drwxr-xr-x 2 root root 4096 Nov 11 06:30 tabby-v1.0.207/

-rw-r--r-- 1 root root 2365 Nov 11 06:30 Vagrantfile

drwxr-xr-x 2 root root 4096 Nov 11 06:30 vagrant-v2.4.1/

drwxr-xr-x 2 root root 4096 Nov 11 06:30 virtualbox-v7.0.18/

-rwxr-xr-x 1 root root 177 Nov 11 06:30 worker_nodes.sh*

root@cp-k8s:~/_Lecture_k8s_learning.kit.git/ch1/1.5# ./controlplane_node.sh

I1111 06:31:51.974839 4697 version.go:256] remote version is much newer: v1.31.2; falling back to: stable-1.30

[init] Using Kubernetes version: v1.30.6

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

W1111 06:31:53.165929 4697 checks.go:844] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm.It is recommended to use "registry.k8s.io/pause:3.9" as the CRI sandbox image.

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [cp-k8s kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.10]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [cp-k8s localhost] and IPs [192.168.1.10 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [cp-k8s localhost] and IPs [192.168.1.10 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "super-admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests"

[kubelet-check] Waiting for a healthy kubelet. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 501.280027ms

[api-check] Waiting for a healthy API server. This can take up to 4m0s

[api-check] The API server is healthy after 7.501705997s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node cp-k8s as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node cp-k8s as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: 123456.1234567890123456

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.10:6443 --token 123456.1234567890123456 \

--discovery-token-ca-cert-hash sha256:695121635b0c28de725d5f4c5436e8d34740b0532161def178bd06329ecf6261

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

serviceaccount/calico-cni-plugin created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

Cloning into '_Lecture_k8s_starter.kit'...

remote: Enumerating objects: 872, done.

remote: Counting objects: 100% (96/96), done.

remote: Compressing objects: 100% (74/74), done.

remote: Total 872 (delta 40), reused 56 (delta 19), pack-reused 776 (from 1)

Receiving objects: 100% (872/872), 164.93 KiB | 5.32 MiB/s, done.

Resolving deltas: 100% (387/387), done.

mv: cannot stat '/home/vagrant/_Lecture_k8s_starter.kit': No such file or directory

find: ‘/root/_Lecture_k8s_starter.kit’: No such file or directory

Cloning into '/tmp/update-kube-cert'...

remote: Enumerating objects: 166, done.

remote: Counting objects: 100% (54/54), done.

remote: Compressing objects: 100% (45/45), done.

remote: Total 166 (delta 18), reused 20 (delta 8), pack-reused 112 (from 1)

Receiving objects: 100% (166/166), 63.56 KiB | 4.54 MiB/s, done.

Resolving deltas: 100% (81/81), done.

CERTIFICATE EXPIRES

/etc/kubernetes/controller-manager.config Nov 10 21:33:56 2025 GMT

/etc/kubernetes/scheduler.config Nov 10 21:33:56 2025 GMT

/etc/kubernetes/admin.config Nov 10 21:33:56 2025 GMT

/etc/kubernetes/super-admin.config Nov 10 21:33:56 2025 GMT

/etc/kubernetes/pki/ca.crt Nov 8 21:33:55 2034 GMT

/etc/kubernetes/pki/apiserver.crt Nov 10 21:33:55 2025 GMT

/etc/kubernetes/pki/apiserver-kubelet-client.crt Nov 10 21:33:55 2025 GMT

/etc/kubernetes/pki/front-proxy-ca.crt Nov 8 21:33:55 2034 GMT

/etc/kubernetes/pki/front-proxy-client.crt Nov 10 21:33:55 2025 GMT

/etc/kubernetes/pki/etcd/ca.crt Nov 8 21:33:55 2034 GMT

/etc/kubernetes/pki/etcd/server.crt Nov 10 21:33:55 2025 GMT

/etc/kubernetes/pki/etcd/peer.crt Nov 10 21:33:55 2025 GMT

/etc/kubernetes/pki/etcd/healthcheck-client.crt Nov 10 21:33:55 2025 GMT

/etc/kubernetes/pki/apiserver-etcd-client.crt Nov 10 21:33:55 2025 GMT

[2024-11-11T06:34:09.05+0900][INFO] backup /etc/kubernetes to /etc/kubernetes.old-20241111

[2024-11-11T06:34:09.06+0900][INFO] updating...

[2024-11-11T06:34:09.07+0900][INFO] updated /etc/kubernetes/pki/etcd/server.conf

[2024-11-11T06:34:09.08+0900][INFO] updated /etc/kubernetes/pki/etcd/peer.conf

[2024-11-11T06:34:09.09+0900][INFO] updated /etc/kubernetes/pki/etcd/healthcheck-client.conf

[2024-11-11T06:34:09.11+0900][INFO] updated /etc/kubernetes/pki/apiserver-etcd-client.conf

[2024-11-11T06:34:09.25+0900][INFO] restarted etcd with containerd

[2024-11-11T06:34:09.27+0900][INFO] updated /etc/kubernetes/pki/apiserver.crt

[2024-11-11T06:34:09.28+0900][INFO] updated /etc/kubernetes/pki/apiserver-kubelet-client.crt

[2024-11-11T06:34:09.29+0900][INFO] updated /etc/kubernetes/controller-manager.conf

[2024-11-11T06:34:09.31+0900][INFO] updated /etc/kubernetes/scheduler.conf

[2024-11-11T06:34:09.32+0900][INFO] updated /etc/kubernetes/admin.conf

[2024-11-11T06:34:09.32+0900][INFO] backup /root/.kube/config to /root/.kube/config.old-20241111

[2024-11-11T06:34:09.32+0900][INFO] copy the admin.conf to /root/.kube/config

[2024-11-11T06:34:09.32+0900][INFO] does not need to update kubelet.conf

[2024-11-11T06:34:09.34+0900][INFO] updated /etc/kubernetes/super-admin.conf

[2024-11-11T06:34:09.35+0900][INFO] updated /etc/kubernetes/pki/front-proxy-client.crt

[2024-11-11T06:34:09.52+0900][INFO] restarted apiserver with containerd

[2024-11-11T06:34:09.61+0900][INFO] restarted controller-manager with containerd

[2024-11-11T06:34:09.74+0900][INFO] restarted scheduler with containerd

[2024-11-11T06:34:09.84+0900][INFO] restarted kubelet

[2024-11-11T06:34:09.85+0900][INFO] done!!!

CERTIFICATE EXPIRES

/etc/kubernetes/controller-manager.config Nov 8 21:34:09 2034 GMT

/etc/kubernetes/scheduler.config Nov 8 21:34:09 2034 GMT

/etc/kubernetes/admin.config Nov 8 21:34:09 2034 GMT

/etc/kubernetes/super-admin.config Nov 8 21:34:09 2034 GMT

/etc/kubernetes/pki/ca.crt Nov 8 21:33:55 2034 GMT

/etc/kubernetes/pki/apiserver.crt Nov 8 21:34:09 2034 GMT

/etc/kubernetes/pki/apiserver-kubelet-client.crt Nov 8 21:34:09 2034 GMT

/etc/kubernetes/pki/front-proxy-ca.crt Nov 8 21:33:55 2034 GMT

/etc/kubernetes/pki/front-proxy-client.crt Nov 8 21:34:09 2034 GMT

/etc/kubernetes/pki/etcd/ca.crt Nov 8 21:33:55 2034 GMT

/etc/kubernetes/pki/etcd/server.crt Nov 8 21:34:09 2034 GMT

/etc/kubernetes/pki/etcd/peer.crt Nov 8 21:34:09 2034 GMT

/etc/kubernetes/pki/etcd/healthcheck-client.crt Nov 8 21:34:09 2034 GMT

/etc/kubernetes/pki/apiserver-etcd-client.crt Nov 8 21:34:09 2034 GMT

Wait 30 seconds for restarting the Control-Plane Node...

0

안녕하세요

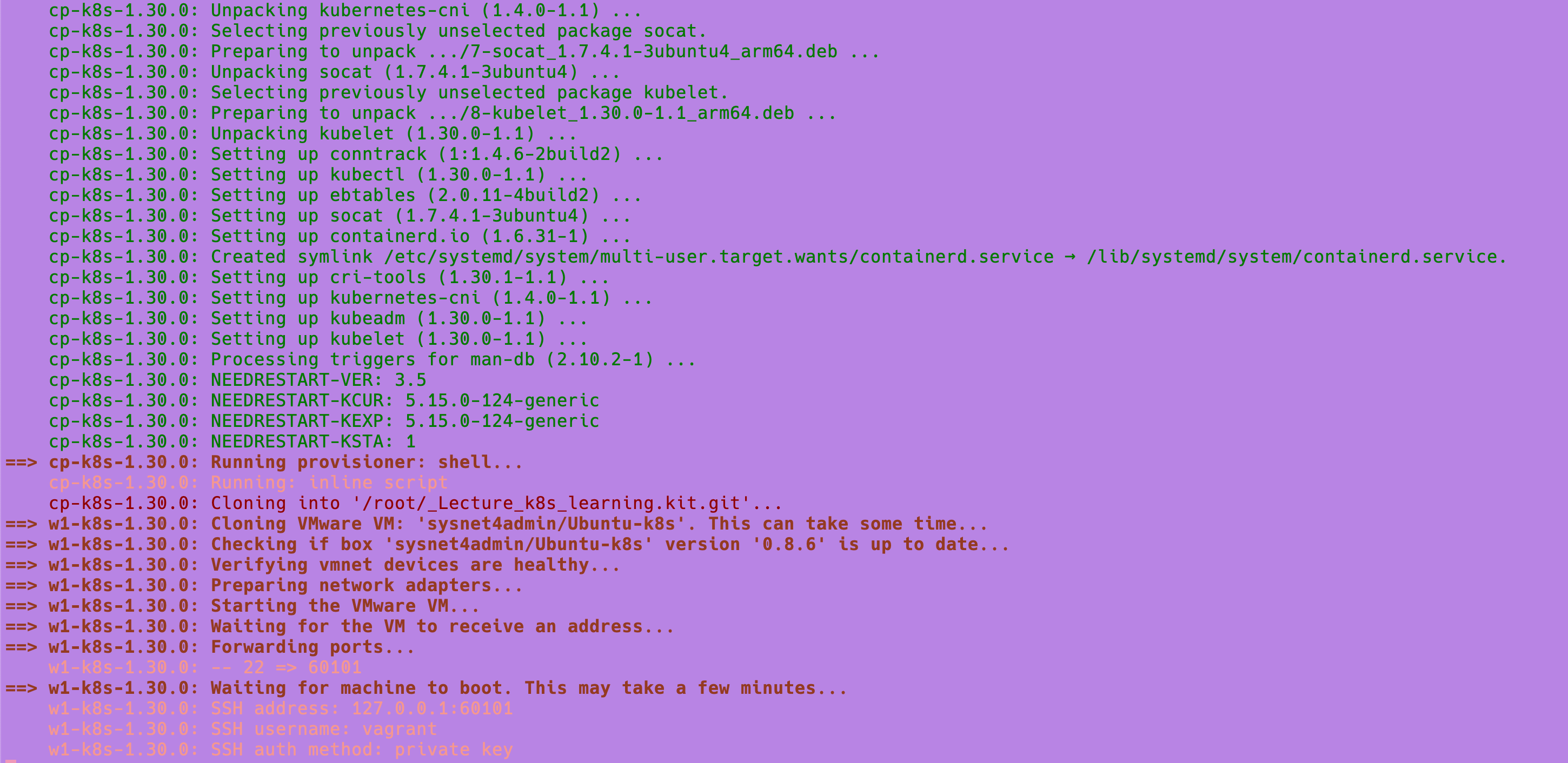

일단 문제를 출발 점은...

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1위의 부분 같은데요.

아침에 동작을 확인해 봤는데 문제가 없어서요. (아.새벽인가..)

왜 1로 세팅이 안되었는가를 확인해 봐야 할 것 같습니다.

If you don't mind, could you try the following?

1. Run vagrant box update (in the directory that contains the Vagrantfile)

2. Download the code again (https://github.com/sysnet4admin/_Lecture_k8s_learning.kit)

3. Check that this URL is reachable from the host (https://raw.githubusercontent.com/sysnet4admin/IaC/main/k8s/CNI/172.16_net_calico_v3.26.0.yaml)

4. If the problem occurs again, connect to cp-k8s and check whether https://raw.githubusercontent.com/sysnet4admin/IaC/main/k8s/CNI/172.16_net_calico_v3.26.0.yaml is reachable (you can check it with curl).
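Steps 3 and 4 can be done with a plain curl request. The sketch below assumes a POSIX shell with curl installed (true inside cp-k8s; on a Windows host, simply opening the URL in a browser is an easy alternative). check_url is a hypothetical helper, not part of the course scripts; it only reports whether the download succeeds and throws the manifest body away.

```shell
#!/bin/sh
# Minimal sketch for steps 3 and 4: is the Calico manifest URL reachable from here?
CALICO_URL="https://raw.githubusercontent.com/sysnet4admin/IaC/main/k8s/CNI/172.16_net_calico_v3.26.0.yaml"

check_url() {
  local url="${1:-$CALICO_URL}"
  local rc=0
  # -f: treat HTTP errors (4xx/5xx) as failures
  # -sS: hide the progress bar but still print errors to stderr
  # -o /dev/null: discard the body; only the exit status matters
  curl -fsS --max-time 10 -o /dev/null "$url" || rc=$?
  if [ "$rc" -eq 0 ]; then
    echo "reachable: $url"
  else
    echo "unreachable: $url (curl exit code $rc)"
  fi
}
```

Run check_url with no argument to test the Calico URL from the question. Note that in the question's first log, kubectl apply failed with dial tcp 127.0.0.1:8080: connection refused; that usually means kubectl found no kubeconfig (the preceding cp /etc/kubernetes/admin.conf had already failed), so that particular error points back to the failed kubeadm init rather than to the URL.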

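For reference, Tabby is only a client program for connecting to the VMs, so it is unrelated to the problem above and you don't need to set it up for this. If you want to use it to connect to cp-k8s, then setting it up is the right thing to do.

Please try the steps above and post the results, and I'll get back to you with more feedback.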

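Hello, tndk1966. I'm the Inflearn AI Intern.

Based on the error output you provided, the problem appears to be that the Kubernetes configuration files and certificate files cannot be found. This usually happens when the basic environment was not set up properly, for example because the initial setup or a script failed.

Here are some tips and related links that may help resolve the problem.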

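Kubernetes version: The log shows kubeadm falling back from the newest release (v1.31.2) to the stable-1.30 line (v1.30.6). If your script or CLI command specifies a version explicitly, check it and make sure you use a compatible version.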

IP Forwarding:

- The error message says that the contents of /proc/sys/net/ipv4/ip_forward are not set to '1', which means IP forwarding is not enabled. Run the following command in a terminal to enable it:

sudo sysctl -w net.ipv4.ip_forward=1

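The preflight check that failed here reads /proc/sys/net/ipv4/ip_forward and expects it to contain 1. A minimal sketch of the same check, which could be run on cp-k8s before ./controlplane_node.sh, is below. ip_forward_enabled and report_ip_forward are hypothetical helper names; the path is a parameter only so the check can also be tried against a test file.

```shell
#!/bin/sh
# Minimal sketch: mirror kubeadm's "FileContent--proc-sys-net-ipv4-ip_forward" preflight check.

ip_forward_enabled() {
  local path="${1:-/proc/sys/net/ipv4/ip_forward}"
  local value
  # Read the first line; an unreadable or missing file counts as "not enabled".
  value="$(head -n 1 "$path" 2>/dev/null)" || return 1
  [ "$value" = "1" ]
}

report_ip_forward() {
  local path="${1:-/proc/sys/net/ipv4/ip_forward}"
  if ip_forward_enabled "$path"; then
    echo "ok: $path is 1"
  else
    echo "fix: $path is not 1; run: sudo sysctl -w net.ipv4.ip_forward=1"
  fi
}
```

Calling report_ip_forward with no argument checks the live value on the node. Keep in mind that sysctl -w changes only the running kernel, so the value is lost after a reboot unless it is also set in a sysctl configuration file.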

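Check the Kubernetes configuration files:

- The configuration files or directories may be in the wrong path or may not have been created. Please double-check the paths and contents of the configuration files in the script or course material you are using.

- Related post: "An error occurs when running vagrapt up"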


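Network and certificate settings:

- The 'Unable to load certificate' error means a required certificate file is missing or its path is set incorrectly. If you set up Kubernetes with the kubeadm command, preflight check failures can be skipped with the --ignore-preflight-errors=all option. However, you should first make sure that all certificate-related paths and files are set up correctly.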

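In the question's log, the certificate errors appear after kubeadm init had already stopped at the preflight check, so /etc/kubernetes was never populated, and the later cp, chown and certificate-expiry steps then fail with "No such file or directory". One quick way to confirm this is to list which kubeadm-generated files are missing. This is a minimal sketch; missing_kubeadm_files is a hypothetical name, and the (partial) file list is taken from paths that appear in the logs above.

```shell
#!/bin/sh
# Minimal sketch: print the kubeadm-generated files that do not exist yet.
# Partial list, taken from paths shown in this thread's logs.
KUBEADM_FILES="admin.conf controller-manager.conf scheduler.conf pki/ca.crt pki/apiserver.crt pki/etcd/ca.crt"

missing_kubeadm_files() {
  local root="${1:-/etc/kubernetes}"   # kubeadm's default configuration directory
  local f
  for f in $KUBEADM_FILES; do          # word splitting is intended; names contain no spaces
    [ -e "$root/$f" ] || echo "$root/$f"
  done
}
```

If every file is reported missing, the certificate errors are a side effect of the failed kubeadm init rather than a separate problem.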

Reset the Kubernetes cluster:

- If a problem occurred during the initial cluster setup, one option is to reset the cluster (kubeadm reset) and try the setup again. This deletes the cluster's data, so proceed with care.

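A minimal sketch of that reset-and-retry sequence on cp-k8s follows. It assumes it runs as root and that the course repository sits at ~/_Lecture_k8s_learning.kit.git/ch1/1.5, as in the question's prompt; run and reset_and_retry are hypothetical helpers. By default it only prints the commands (a dry run), because kubeadm reset removes the certificates, kubeconfig files, static Pod manifests and local etcd data that kubeadm init created.

```shell
#!/bin/sh
# Minimal sketch: reset a half-initialized control plane and rerun the lecture script.
# DRY_RUN=1 (the default) only prints the commands; set DRY_RUN=0 to execute them.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"          # dry run: show what would be executed
  else
    "$@"
  fi
}

reset_and_retry() {
  # Default path matches the prompt shown in the question (root's home directory).
  local script_dir="${1:-$HOME/_Lecture_k8s_learning.kit.git/ch1/1.5}"
  run kubeadm reset -f || return 1       # -f: skip the confirmation prompt
  run sysctl -w net.ipv4.ip_forward=1    # the preflight check that failed in this thread
  run "$script_dir/controlplane_node.sh"
}
```

Whether controlplane_node.sh is safe to rerun unchanged is an assumption: the question's log shows a repeated run hitting fatal: destination path '_Lecture_k8s_starter.kit' already exists.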

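Issues like this come up often, but the details differ from case to case, so looking at other users' questions may also help you find a solution.

If you suspect a problem in the setup scripts or materials, check the latest version of the course materials; you can also ask the instructor directly. If everything is configured correctly and the problem persists, please share more details about your environment.

To help you understand, I recommend links to similar answers based on the instructor's existing answers.

This is currently a beta feature, so please understand that the answer may not be fully satisfactory. 🙏

If you have further questions, please keep asking. The instructor will answer soon.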

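For reference, if you did not connect right after vagrant up but instead shut the VMs down and started them again, the value changes from 1 back to 0 and it won't work... (I think this might be the cause? / To simplify the code, I changed it to set the value only with echo... this may be a side effect of that.)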
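Writing 1 into /proc/sys/net/ipv4/ip_forward with echo (or using sysctl -w) changes only the running kernel, which matches the symptom above: the value is back to 0 after the VM restarts. A common way to persist it is a drop-in file under /etc/sysctl.d/, which is applied again at boot; the Kubernetes container-runtime prerequisites use /etc/sysctl.d/k8s.conf for exactly this setting. The sketch below is an assumption about how this could be done, not the course's actual fix; persist_ip_forward and apply_sysctl are hypothetical names.

```shell
#!/bin/sh
# Minimal sketch: make net.ipv4.ip_forward=1 survive a VM reboot.
# /proc/sys writes are lost on reboot; /etc/sysctl.d/*.conf files are re-applied at boot.

persist_ip_forward() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"   # file name used in the Kubernetes docs
  mkdir -p "$(dirname "$conf")"
  # Idempotent: append the setting only if an "= 1" line is not already present.
  if ! grep -qE '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1[[:space:]]*$' "$conf" 2>/dev/null; then
    echo 'net.ipv4.ip_forward = 1' >> "$conf"
  fi
}

apply_sysctl() {
  # Reload all sysctl configuration files now, without a reboot (needs root).
  sysctl --system
}
```

On cp-k8s as root, call persist_ip_forward and then apply_sysctl, and confirm with sysctl net.ipv4.ip_forward, which should print net.ipv4.ip_forward = 1, both now and after a restart.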